Discover the historical evolution of AI in finance, from early automation to cutting-edge technologies. Explore its impact on risk management, algorithmic trading, credit assessment, customer service, fraud detection, and cybersecurity. Gain insights into milestone advancements shaping the future of finance.

Expert Opinions On Recent NLP Advancements

Discover expert opinions on recent advancements in Natural Language Processing (NLP) and their potential implications across industries. Gain valuable insights on the current state, challenges, and future trends in NLP.

Educational Resources For Understanding NLP Advancements

Looking to stay updated with NLP advancements? Explore books, online courses, research papers, tutorials, forums, and more educational resources in this article.

In today’s fast-paced world, the field of Natural Language Processing (NLP) is constantly evolving and pushing boundaries. To stay ahead of the curve and gain a comprehensive understanding of the latest advancements in NLP, it is crucial to have access to reliable and educational resources. This article aims to provide you with a concise overview of the diverse educational resources available that can help you navigate the complex world of NLP advancements, empowering you with the knowledge and skills needed to excel in this cutting-edge field.

Books

Introduction to Natural Language Processing

If you are new to the field of Natural Language Processing (NLP), an introductory book can provide a solid foundation for understanding the basic concepts and techniques. Such books typically cover topics such as tokenization, text classification, part-of-speech tagging, and sentiment analysis. Through clear explanations and practical examples, you will gain a firm grasp of the fundamentals of NLP.

Advanced Natural Language Processing

Once you have mastered the basics, an advanced NLP book can further enhance your knowledge and skills in this rapidly evolving field. Advanced Natural Language Processing books delve into more complex topics such as language generation, machine translation, discourse analysis, and semantic parsing. By exploring these advanced concepts, you will be well-equipped to tackle challenging NLP problems and develop innovative solutions.

The Future of NLP

As NLP continues to evolve, it is crucial to stay updated with the latest advancements and trends. Books on the future of NLP provide insights into emerging technologies, research directions, and potential applications in various industries. They explore topics such as deep learning, transfer learning, and neural language models. By understanding where NLP is heading, you can align your learning and research efforts with upcoming opportunities and challenges.

NLP in Machine Learning

NLP and Machine Learning are closely intertwined, and understanding their intersection is essential for unlocking the full potential of NLP. Books on NLP in Machine Learning provide a comprehensive overview of how NLP techniques can be applied and integrated with machine learning algorithms. These books cover topics such as feature extraction, text classification, sentiment analysis, and information retrieval. By exploring the synergy between NLP and machine learning, you can develop effective models and systems that leverage the power of both domains.

Online Courses

NLP Fundamentals

Online courses that focus on NLP fundamentals are ideal for beginners who want a structured learning experience. NLP Fundamentals courses cover topics such as text processing, language modeling, information extraction, and named entity recognition. By following the course curriculum and completing the exercises, you will gain hands-on experience with NLP techniques and develop a strong foundation for further exploration.

Applied NLP Techniques

Applied NLP Techniques courses build upon the fundamental concepts and equip learners with practical skills to solve real-world NLP problems. These courses dive into topics such as text classification, sentiment analysis, question answering, and machine translation. Through a combination of lectures, coding assignments, and projects, you will learn how to leverage NLP techniques and tools to build robust and scalable applications.

NLP for Machine Learning

If you are already familiar with machine learning and want to specialize in NLP, NLP for Machine Learning courses are a great choice. These courses focus on the application of NLP techniques within the context of machine learning algorithms. You will learn how to preprocess text data, extract meaningful features, and train models for tasks such as text classification, sequence labeling, and text generation. By honing your NLP skills in the context of machine learning, you will be able to develop sophisticated models that can handle complex language tasks.

NLP and Deep Learning

NLP and Deep Learning courses combine the power of deep learning algorithms with NLP techniques to solve challenging language problems. These courses cover topics such as recurrent neural networks (RNNs), convolutional neural networks (CNNs), attention mechanisms, and transformer models. By understanding the underlying principles and architectures, you will gain the skills to design and train state-of-the-art models for tasks such as machine translation, sentiment analysis, and natural language understanding.

Research Papers

State-of-the-Art NLP Models

Research papers on state-of-the-art NLP models provide detailed insights into the latest advancements in the field. These papers showcase cutting-edge models that achieve state-of-the-art performance on benchmark datasets. By studying these papers, you can understand the novel architectures, training strategies, and techniques that contribute to the improved performance of these models. This knowledge can inspire you to innovate and develop new approaches in your own NLP research or applications.

NLP Breakthroughs and Innovations

Papers on NLP breakthroughs and innovations highlight novel ideas and techniques that push the boundaries of what is possible in NLP. These papers often introduce new methodologies, datasets, or evaluation metrics that challenge the existing norms in the field. By exploring these research papers, you can gain inspiration for your own research and contribute to the advancement of NLP.

NLP Applications in Various Fields

NLP has numerous applications in various fields such as healthcare, finance, social media analysis, and customer service. Research papers focusing on NLP applications in these fields provide insights into how NLP techniques can be adapted and applied to solve domain-specific problems. By studying these papers, you can understand the unique challenges associated with different application domains and identify opportunities for innovation.

Comparative Studies of NLP Approaches

Comparative studies evaluate and compare different methods, algorithms, or models for a specific NLP task or problem. These papers provide valuable insights into the strengths and weaknesses of various approaches, allowing researchers and practitioners to make informed decisions when choosing the most suitable technique for a particular task. By analyzing these comparative studies, you can gain a comprehensive understanding of the landscape of NLP techniques and identify the most effective strategies.

Tutorials and Video Lectures

Introductory NLP Tutorials

Introductory NLP tutorials provide step-by-step guidance for beginners who want to understand and apply NLP techniques. These tutorials cover topics such as text preprocessing, tokenization, part-of-speech tagging, and basic text classification. By following these tutorials, you can gain hands-on experience with NLP tools and libraries and build confidence in implementing NLP algorithms.

Advanced NLP Techniques Explained

Tutorials that explain advanced NLP techniques are designed for learners who want to deepen their understanding of complex NLP concepts. These tutorials dive into topics such as word embeddings, sequence labeling, named entity recognition, and attention mechanisms. By following these tutorials, you can gain the knowledge and skills necessary to tackle more challenging NLP tasks and develop state-of-the-art models.

NLP Case Studies and Examples

Case-study tutorials present real-world applications of NLP techniques in various domains. These tutorials walk you through the entire process of solving a specific NLP problem, from data preprocessing to model evaluation. By following these tutorials, you can learn practical techniques and best practices for applying NLP to address real-world challenges.

NLP Video Lectures by Experts

NLP video lectures by experts offer a comprehensive and engaging way to learn about the latest NLP advancements and trends. These lectures cover a wide range of topics, including NLP fundamentals, deep learning for NLP, natural language understanding, and language generation. By watching these video lectures, you can benefit from the expertise and insights of renowned researchers and practitioners in the field.

Online Forums and Communities

Active NLP Discussion Forums

Active NLP discussion forums provide a platform for professionals and enthusiasts to exchange ideas, ask questions, and engage in discussions related to NLP. These forums often have dedicated sections for different NLP topics, allowing users to access valuable insights and perspectives from a diverse community. By actively participating in these forums, you can expand your network, seek advice, and stay updated with the latest developments in the NLP community.

NLP Q&A Platforms

NLP Q&A platforms offer a space where users can ask specific questions related to NLP and receive answers from experts and experienced practitioners. These platforms allow you to clarify doubts, seek guidance on challenging NLP problems, and access valuable insights shared by the community. By utilizing these platforms, you can benefit from the collective knowledge and experience of the NLP community and solve problems more effectively.

Specialized NLP Groups on Social Media

Specialized NLP groups on social media platforms provide a convenient way to connect with like-minded individuals who share a passion for NLP. These groups often share the latest news, research papers, tutorials, and job opportunities related to NLP. By joining these groups, you can stay updated with the latest advancements in the field, collaborate on projects, and foster meaningful connections with professionals and researchers.

NLP Meetups and Events

NLP meetups and events offer invaluable opportunities to network with professionals, researchers, and practitioners in the NLP community. These events often feature talks, workshops, and panel discussions on a wide range of NLP topics, providing deep insights and inspiring discussions. By attending these events, you can meet experts in the field, exchange ideas, and stay up-to-date with the latest advancements in NLP.

Blogs and Websites

Top NLP Blogs for Beginners

Top NLP blogs for beginners offer a curated collection of articles and tutorials that cater to individuals who are new to the field of NLP. These blogs cover a wide range of topics, including NLP fundamentals, practical techniques, and industry trends. By following these blogs, you can gain valuable insights, practical tips, and guidance to kickstart your NLP journey.

NLP News and Updates

NLP news and updates websites provide real-time information and updates on the latest advancements and breakthroughs in the field of NLP. These websites aggregate news articles, research papers, and blog posts from various sources to keep you informed about the most recent developments. By regularly visiting these websites, you can stay up-to-date with the rapidly evolving landscape of NLP.

In-depth NLP Articles and Analysis

In-depth NLP articles and analysis websites offer comprehensive and detailed articles that explore complex NLP concepts, techniques, and research papers. These articles provide deep insights and analysis, helping you understand the nuances and intricacies of advanced NLP topics. By reading these articles, you can enhance your understanding of the theoretical foundations of NLP and gain a broader perspective on the field.

NLP Toolkits and Resources

NLP toolkits and resources websites provide a one-stop platform for accessing a wide range of NLP tools, libraries, datasets, and pre-trained models. These websites often include documentation, tutorials, and community support to help you effectively utilize these resources. By exploring these toolkits and resources, you can accelerate your NLP projects and leverage the expertise and contributions of the NLP community.

Academic Institutions and Research Centers

Leading Universities for NLP Research

Leading universities for NLP research offer specialized programs, courses, and research opportunities that focus on advancing the field of NLP. These universities often have dedicated research labs and centers that conduct cutting-edge research and collaborate with industry partners. By pursuing NLP studies at these universities, you can learn from renowned experts, work on impactful research projects, and gain a competitive edge in the field.

NLP Research Labs and Centers

NLP research labs and centers are dedicated institutions that conduct research, development, and innovation in the field of NLP. These labs and centers contribute to the advancement of NLP by conducting experiments, publishing research papers, and collaborating with industry and academia. By staying updated with the work of these labs and centers, you can gain insights into the latest research trends and opportunities for collaboration.

NLP Course Offerings

NLP course offerings by academic institutions provide structured and comprehensive programs for individuals seeking to specialize in NLP. These courses cover a wide range of topics, including NLP fundamentals, advanced algorithms, and applications in various domains. By enrolling in these courses, you can learn from experienced faculty, collaborate with peers, and gain hands-on experience with state-of-the-art NLP tools and techniques.

NLP Research Scholarships and Grants

NLP research scholarships and grants provide financial support to students and researchers who are pursuing innovative NLP projects. These scholarships and grants enable individuals to focus on their research without the burden of financial constraints. By applying for these opportunities, you can secure funding for your NLP research and contribute to the advancement of the field.

Industry Conferences and Events

NLP Conferences and Workshops

NLP conferences and workshops are premier venues for sharing the latest research findings, advancements, and applications in the field. These conferences bring together researchers, practitioners, and industry experts from around the world to present their work, exchange ideas, and establish collaborations. By attending these conferences and workshops, you can gain insights into the state-of-the-art in NLP, network with professionals, and showcase your own research.

Industry Experts’ Talks and Panels

Industry experts’ talks and panels provide valuable insights into the practical applications and challenges of implementing NLP in real-world settings. These sessions often feature talks by experienced professionals, panel discussions on NLP-related topics, and Q&A sessions. By attending these sessions, you can learn from the firsthand experiences of experts, gain practical knowledge, and get a glimpse into the industry trends and best practices.

NLP Startup Competitions

NLP startup competitions offer platforms for entrepreneurs and innovators to showcase their NLP-based products, services, and solutions. These competitions often attract a diverse range of startups that leverage NLP techniques in areas such as chatbots, virtual assistants, sentiment analysis, and recommendation systems. By participating in these competitions, you can gain exposure, validation, and potentially secure funding for your NLP startup.

Exhibitions of NLP Technologies

Exhibitions of NLP technologies provide opportunities to explore the latest advancements, products, and services in the field. These exhibitions showcase cutting-edge NLP technologies from industry leaders and startups. By attending these exhibitions, you can get hands-on experience with state-of-the-art NLP tools, interact with industry experts, and discover innovative solutions that can enhance your NLP projects.

NLP Open Source Projects

Popular NLP Libraries and Frameworks

Popular NLP libraries and frameworks provide a rich set of tools and functions to streamline the development and deployment of NLP projects. These libraries often include functionalities for text processing, feature extraction, deep learning, and model evaluation. By leveraging these libraries and frameworks, you can save significant time and effort in implementing NLP algorithms and focus on solving the core challenges of your projects.

Contributing to NLP Open Source

Contributing to NLP open source projects allows you to collaborate with the NLP community, improve existing tools, and develop new functionalities. Open source projects are often maintained by a community of developers who actively contribute to their enhancement and support. By contributing to these projects, you not only improve your coding skills but also give back to the community and contribute to the collective knowledge in NLP.

NLP Datasets and Corpora

NLP datasets and corpora play a crucial role in training and evaluating NLP models. These datasets often include annotated or labeled text data for various NLP tasks such as sentiment analysis, named entity recognition, and machine translation. By accessing and utilizing these datasets, you can train models that generalize well and achieve higher performance. Additionally, you can also contribute to the creation and curation of new datasets to further advance the field.

NLP Model Repositories

NLP model repositories are platforms where researchers and practitioners can access pre-trained models that are ready to be used or fine-tuned for specific NLP tasks. These repositories often host a wide array of models for tasks such as text classification, sequence labeling, text generation, and sentiment analysis. By leveraging these pre-trained models, you can save valuable time and resources in building and training models from scratch.

Collaborative Research Platforms

NLP Collaboration Platforms

NLP collaboration platforms enable researchers and practitioners to collaborate on NLP projects in a seamless and efficient manner. These platforms often provide features such as project management, version control, and team collaboration tools. By utilizing these platforms, you can streamline the research process, collaborate with team members, and accelerate the development of innovative NLP solutions.

Code Sharing and Version Control for NLP

Code sharing and version control platforms are well suited to researchers and practitioners working on NLP projects. These platforms enable efficient sharing and collaborative development of NLP code and models. By utilizing these platforms, you can collaborate with others, track changes, and maintain a version history of your NLP codebase, which supports reproducibility and smoother teamwork.

Collaborative NLP Research Networks

Collaborative NLP research networks bring together researchers, practitioners, and experts in the field to foster collaboration and knowledge exchange. These networks often organize workshops, conferences, and collaborative projects to facilitate networking and collaboration. By joining these networks, you can connect with peers, share ideas, and collaborate on cutting-edge NLP research projects.

Building NLP Research Communities

Building NLP research communities involves actively contributing to the development and growth of the NLP field. This can be achieved through organizing workshops, hosting webinars, moderating forums, or sharing research findings and insights. By actively participating in and contributing to NLP research communities, you can play a significant role in shaping the direction of the field and fostering collaboration and innovation.

Technical Review Of NLP Advancements In 2023

Discover the latest advancements in Natural Language Processing (NLP) in 2023. This technical review explores enhanced language models, improved text classification, multi-modal NLP, and deep learning architectures. Learn how NLP is revolutionizing communication and transforming various industries.

This article provides an overview of the advancements in Natural Language Processing (NLP) that emerged in 2023. As language technology continues to evolve at a rapid pace, it has significantly impacted various industries and transformed the way we interact with machines. From the introduction of more sophisticated language models to the advancement of sentiment analysis techniques, this technical review will delve into the latest breakthroughs and their implications for businesses and individuals alike. Get ready to explore the potential of NLP in revolutionizing communication and enhancing the efficiency of daily tasks.

Introduction

Natural Language Processing (NLP) is a subfield of artificial intelligence (AI) that focuses on the interaction between humans and computers using natural language. It involves the development and application of computational models to understand, analyze, and generate human language. In recent years, NLP has experienced significant advancements, driven by enhanced language models, improved text classification techniques, multi-modal NLP, and deep learning architectures. These advancements have led to a wide range of applications, including natural language understanding, machine translation, sentiment analysis, and question answering systems. However, NLP also faces challenges and limitations, such as data privacy concerns, bias and fairness issues, and the need for interpretable NLP models. Looking ahead, continued advancements in deep learning, ethical considerations, domain-specific NLP, and the development of human-like conversational agents are expected to shape the field.

Overview of NLP

Definition of NLP

Natural Language Processing (NLP) is a branch of AI that focuses on the interaction between computers and human language. It involves the development of algorithms and models that enable computers to understand, analyze, and generate human language in a way that is meaningful and contextually relevant.

History of NLP

The field of NLP originated in the 1950s with the development of machine translation systems and early language processing techniques. Over the years, NLP has evolved through various stages, including rule-based systems, statistical models, and more recently, deep learning approaches. The advancements in computational power and the availability of large-scale datasets have greatly contributed to the progress of NLP, allowing researchers to develop more sophisticated models with improved performance.

Advancements in NLP

Enhanced Language Models

One of the major advancements in NLP has been the development of enhanced language models, such as OpenAI’s GPT-3. These models are trained on massive amounts of text data and are capable of generating human-like responses and understanding complex language structures. Enhanced language models have revolutionized various applications, including text generation, chatbots, and dialogue systems.

Improved Text Classification Techniques

Text classification is a fundamental task in NLP, and advancements in this area have greatly improved the accuracy and efficiency of classifying text data. Techniques such as deep learning, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have shown remarkable performance in tasks such as sentiment analysis, spam detection, and document categorization.
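
To make the task concrete, here is a minimal sketch of a spam classifier. It uses naive Bayes, a much simpler baseline than the CNNs and RNNs mentioned above, because it fits in a few lines of standard-library Python. The training sentences and the function names (tokenize, train, predict) are hypothetical and chosen only for illustration.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase and split on whitespace; real systems use richer tokenizers."""
    return text.lower().split()


def train(examples):
    """Count word frequencies per label from (text, label) pairs."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, label_counts, vocab


def predict(text, model):
    """Return the label with the highest log-probability under naive Bayes."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, doc_count in label_counts.items():
        score = math.log(doc_count / total_docs)
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            # Laplace (add-one) smoothing keeps unseen words from zeroing the score.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical toy training data, invented for illustration.
TRAINING = [
    ("win a free prize now", "spam"),
    ("free money claim your prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("please review the project report", "ham"),
]

model = train(TRAINING)
print(predict("claim your free prize", model))
print(predict("project meeting on monday", model))
```

A neural classifier replaces the word counts with learned representations, but the interface stays the same: text goes in and a label comes out.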

Multi-modal NLP

Multi-modal NLP involves the integration of different modalities, such as text, images, and audio, to improve the understanding and generation of human language. This approach has gained significant attention in areas like image captioning, video summarization, and speech-to-text translation. By incorporating multiple modalities, NLP models can capture more nuanced information, leading to more accurate and contextually relevant results.

Deep Learning Architectures for NLP

Deep learning has played a crucial role in advancing NLP by enabling models to learn complex patterns and representations from raw text data. Architectures such as recurrent neural networks (RNNs), including long short-term memory (LSTM) networks, and transformers have demonstrated superior performance in tasks like machine translation, named entity recognition, and text summarization. Deep learning models have the ability to capture both local and global dependencies in text, allowing for more comprehensive language understanding.
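
The way transformers capture global dependencies can be illustrated with scaled dot-product attention, the core operation of that architecture. The sketch below is a simplified, standard-library version: it assumes hypothetical two-dimensional token embeddings and uses them directly as queries, keys, and values, whereas real transformers first apply learned projections.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        weights = softmax([dot(q, k) / scale for k in keys])
        # Each output is a weighted average of every value vector,
        # so any position can draw on any other position.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional embeddings for a three-token sentence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how strongly one token attends to every token in the sequence, regardless of distance. An RNN, by contrast, has to carry that information step by step.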

Applications of NLP

Natural Language Understanding

Natural Language Understanding (NLU) refers to the ability of computers to comprehend and interpret human language. NLP techniques have been widely applied in areas such as voice assistants, virtual agents, and customer support chatbots. By understanding user queries and intents, NLU systems can provide more accurate and personalized responses, improving the overall user experience.

Machine Translation

Machine translation is the task of automatically translating text from one language to another. NLP advancements have greatly improved the performance of machine translation systems. Neural machine translation models, which utilize deep learning architectures, have shown significant progress in generating more accurate and fluent translations across various language pairs.

Sentiment Analysis

Sentiment analysis involves the identification and extraction of subjective information, such as opinions, emotions, and sentiments, from text data. This has numerous applications in areas such as market research, social media analysis, and customer feedback analysis. NLP techniques, particularly deep learning models, have greatly enhanced the accuracy and efficiency of sentiment analysis, enabling organizations to gain valuable insights from large volumes of textual data.

Question Answering Systems

Question answering systems aim to automatically provide relevant answers to user queries, often in the form of natural language responses. NLP advancements have powered the development of sophisticated question answering systems, such as IBM’s Watson, and of pre-trained language models, such as Google’s BERT, that can be fine-tuned for question answering. These systems utilize techniques like information retrieval, semantic representation, and deep learning to analyze and interpret user queries, extracting relevant information from large knowledge bases to generate accurate and contextual answers.
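
The information retrieval step mentioned above can be sketched with TF-IDF and cosine similarity: given a question, pick the passage whose wording overlaps most with it. This is only the retrieval stage, not a full question answering system, and the passages, question, and function names are hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    """Split on whitespace, strip basic punctuation, and lowercase."""
    return [w.strip(".,?!").lower() for w in text.split()]


def build_index(passages):
    """Compute a TF-IDF vector (as a dict) for each passage."""
    tokenized = [tokenize(p) for p in passages]
    doc_freq = Counter(w for tokens in tokenized for w in set(tokens))
    n = len(passages)
    # Smoothed inverse document frequency: rarer words weigh more.
    idf = {w: math.log((1 + n) / (1 + df)) + 1 for w, df in doc_freq.items()}
    vectors = [
        {w: tf * idf[w] for w, tf in Counter(tokens).items()}
        for tokens in tokenized
    ]
    return idf, vectors


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    shared = set(a) & set(b)
    num = sum(a[w] * b[w] for w in shared)
    den = math.sqrt(sum(x * x for x in a.values())) * math.sqrt(
        sum(x * x for x in b.values())
    )
    return num / den if den else 0.0


def retrieve(question, passages, index):
    """Return the passage most similar to the question."""
    idf, vectors = index
    q_counts = Counter(w for w in tokenize(question) if w in idf)
    q_vec = {w: tf * idf[w] for w, tf in q_counts.items()}
    scores = [cosine(q_vec, v) for v in vectors]
    best = max(range(len(passages)), key=scores.__getitem__)
    return passages[best]


# Hypothetical knowledge base, invented for illustration.
PASSAGES = [
    "Machine translation converts text from one language to another.",
    "Sentiment analysis extracts opinions and emotions from text.",
    "Named entity recognition finds people, places, and organizations.",
]

index = build_index(PASSAGES)
print(retrieve("What does sentiment analysis extract?", PASSAGES, index))
```

In a complete system, a reader model would then extract or generate the answer from the retrieved passage.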

Challenges and Limitations

Data Privacy and Security Concerns

As NLP capabilities continue to grow, concerns regarding data privacy and security become increasingly important. NLP models often require access to large amounts of user data, which raises concerns about data protection and potential misuse of personal information. Robust data privacy measures and ethical safeguards are therefore vital for the responsible development and deployment of NLP systems.

Bias and Fairness Issues

Another challenge in NLP is the presence of bias in language data and models. NLP models are trained on large datasets, and if these datasets are biased, the models can learn and perpetuate biased behavior or discriminatory patterns. This can lead to unfair outcomes or reinforce existing biases in automated systems. Addressing bias and fairness issues in NLP models is crucial to ensure equitable and unbiased treatment of users and to foster inclusivity.
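
One way to make such unfairness measurable is to compare how often a model gives a favorable prediction to each group. The sketch below computes the demographic parity gap, which is only one of several fairness metrics and is not prescribed by this article. The predictions and group labels are hypothetical.

```python
def positive_rate(predictions):
    """Share of predictions that are positive (1)."""
    return sum(predictions) / len(predictions)


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    by_group = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    rates = {g: positive_rate(p) for g, p in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical model outputs (1 = favorable decision) and group labels.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap, rates = demographic_parity_gap(predictions, groups)
print(rates, gap)
```

A gap near zero means the groups receive favorable predictions at similar rates. A large gap is a signal to inspect the training data and the model, not proof of discrimination on its own.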

Interpretable NLP Models

Deep learning models used in NLP, such as transformers, are known for their impressive performance, but they often lack interpretability. Understanding why a model made a particular prediction or inference is essential for building trust and ensuring transparency in NLP systems. Developing interpretable NLP models is an ongoing challenge that researchers are actively working on, aiming to strike a balance between performance and interpretability.

The Future of NLP

Continued Advancements in Deep Learning

The future of NLP is expected to witness continued advancements in deep learning techniques. Researchers will strive to develop more advanced architectures, fine-tune models on larger datasets, and explore novel training techniques to further improve the performance of NLP systems. This will enable NLP models to understand and generate language more accurately, leading to enhanced user experiences and improved application outcomes.

Ethical Considerations

Ethical considerations will play a pivotal role in the future development and deployment of NLP systems. Addressing concerns related to data privacy, bias, and fairness will be crucial to ensure responsible and ethical use of NLP technologies. Frameworks and guidelines for ethical NLP practices will need to be developed and followed by researchers, developers, and organizations to promote transparency and safeguard user interests.

Domain-specific NLP

NLP advancements are likely to focus on domain-specific applications, where models are tailored to specific fields or industries. By understanding the nuances and specific language patterns within a domain, NLP models can provide more accurate and contextually relevant results. For example, domain-specific NLP models can assist in medical diagnoses, legal research, or financial analysis, offering specialized support and improving overall decision-making processes.

Human-like Conversational Agents

The development of human-like conversational agents, often referred to as chatbots or virtual assistants, will continue to be a major area of focus in NLP research. These agents aim to provide natural and seamless interactions with users, simulating human-like conversation. Advancements in conversational agents will involve improving language understanding, response generation, and context awareness, enabling more engaging and effective human-computer interactions.

Conclusion

The advancements in NLP have revolutionized the field of artificial intelligence by enabling computers to understand, analyze, and generate human language. Enhanced language models, improved text classification techniques, multi-modal NLP, and deep learning architectures have propelled NLP applications in various domains. However, challenges related to data privacy, bias, fairness, and interpretability need to be addressed for responsible and ethical development and deployment of NLP systems. Looking ahead, continued advancements in deep learning, ethical considerations, domain-specific NLP, and the development of human-like conversational agents will shape the field, promising more accurate, contextually relevant, and user-centric language processing capabilities.

Latest AI Innovations In Financial Risk Assessment 2023

Discover the latest AI innovations revolutionizing financial risk assessment in 2023. Explore advanced machine learning algorithms and predictive analytics models that enable professionals to make informed decisions with confidence.

In the rapidly changing landscape of the financial industry, staying ahead of risk assessment has become a paramount concern for professionals. As we approach 2023, the use of artificial intelligence (AI) is set to revolutionize financial risk assessment in ways we have never seen before. This article will explore the latest AI innovations that are shaping the future of financial risk assessment, from advanced machine learning algorithms to predictive analytics models. Discover how these technologies are revolutionizing the way professionals assess risk, enabling them to make informed decisions and navigate uncertainties with greater confidence and precision.

1. Data Analysis and Pattern Recognition

In the field of financial risk assessment, data analysis and pattern recognition play a crucial role in helping organizations identify and mitigate potential risks. Machine learning algorithms have emerged as powerful tools for risk assessment, enabling businesses to analyze vast amounts of data and identify patterns that may indicate potential risks.

1.1 Machine learning algorithms for risk assessment

Machine learning algorithms offer a powerful approach to risk assessment by analyzing historical data and identifying patterns that may be indicative of future risks. These algorithms can be trained on large datasets to identify complex patterns and relationships that humans may not be able to detect. By leveraging machine learning algorithms, organizations can make more informed decisions regarding risk exposure and develop effective risk management strategies.

1.2 Predictive analytics for identifying patterns and trends

Predictive analytics is a branch of data analytics that uses historical data to make predictions about future outcomes. In the context of financial risk assessment, predictive analytics can be used to identify patterns and trends that may indicate potential risks. By analyzing historical data and applying predictive models, organizations can gain insights into potential risks and take proactive measures to mitigate them.

1.3 Big data processing for improved risk assessment

The advent of big data has revolutionized the field of financial risk assessment. Big data refers to the massive volume of structured and unstructured data that organizations have access to. By harnessing the power of big data processing technologies, organizations can analyze vast amounts of data in real time and gain deeper insights into potential risks. This enables organizations to make more accurate risk assessments and develop effective risk management strategies.

1.4 Natural language processing for sentiment analysis

Natural language processing (NLP) is a branch of artificial intelligence that focuses on the interaction between computers and humans through natural language. In the context of financial risk assessment, NLP can be used for sentiment analysis, which involves analyzing textual data to determine the sentiment or emotion behind it. By analyzing social media posts, news articles, and other textual data, organizations can gain insights into public sentiment and identify potential risks.
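A minimal sketch of lexicon-based sentiment scoring, one of the simplest forms of sentiment analysis. The word lists, the `sentiment_score` function, and the sample headlines are hypothetical and exist only for illustration; production systems typically rely on trained language models rather than fixed word lists.

```python
import re

# Hypothetical, deliberately tiny word lists (illustration only).
POSITIVE = {"growth", "profit", "upgrade", "strong", "beat"}
NEGATIVE = {"loss", "default", "downgrade", "fraud", "weak", "lawsuit"}


def sentiment_score(text):
    """Return (positive - negative) / matched words, in [-1, 1]; 0.0 if nothing matches."""
    words = re.findall(r"[a-z]+", text.lower())
    positive = sum(word in POSITIVE for word in words)
    negative = sum(word in NEGATIVE for word in words)
    matched = positive + negative
    return 0.0 if matched == 0 else (positive - negative) / matched


# Hypothetical headlines.
headlines = [
    "Bank reports strong profit growth",
    "Regulator opens fraud lawsuit after loan default",
]
scores = [sentiment_score(headline) for headline in headlines]
```

A score near -1 across many recent headlines about a counterparty could be treated as one input to a broader risk signal, not as a verdict on its own.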

2. Automation and Robotic Process Automation (RPA)

Automation and Robotic Process Automation (RPA) are transforming the way organizations conduct risk assessment processes. By automating manual and repetitive tasks, organizations can streamline their risk assessment processes and improve efficiency.

2.1 Automated data collection and verification

One of the key challenges in risk assessment is the collection and verification of data. Automation can help organizations streamline this process by automatically collecting data from various sources and verifying its accuracy. By reducing manual intervention, organizations can speed up the risk assessment process and ensure the reliability of the collected data.

2.2 Streamlining risk assessment processes through RPA

Robotic Process Automation (RPA) involves the use of software robots to automate repetitive tasks. In the context of risk assessment, RPA can be used to automate data entry, data reconciliation, and other manual tasks involved in the risk assessment process. By deploying software robots, organizations can streamline their risk assessment processes, reduce errors, and improve efficiency.

2.3 Efficient report generation and analysis using AI

Generating reports and analyzing data are essential components of risk assessment. AI-powered tools can automate report generation and data analysis, enabling organizations to produce accurate reports in a fraction of the time. By leveraging AI, organizations can free up valuable resources and focus on more strategic tasks, such as risk mitigation and decision-making.

2.4 Automated compliance monitoring for risk mitigation

Compliance monitoring is a critical aspect of risk assessment, ensuring that organizations adhere to regulatory requirements and industry standards. Automation can help organizations monitor compliance by automatically tracking relevant regulations and standards, analyzing data, and alerting stakeholders in case of any compliance breaches. By automating compliance monitoring, organizations can reduce the risk of non-compliance and avoid potential penalties.

3. Fraud Detection and Prevention

Fraud detection and prevention are significant challenges for organizations in the financial industry. The rise of AI technologies has opened up new possibilities for identifying and preventing fraudulent activities.

3.1 AI-powered anomaly detection techniques

Anomaly detection involves identifying data points that deviate significantly from normal behavior. AI-powered anomaly detection techniques can analyze large datasets and identify patterns that may indicate fraudulent activities. By leveraging machine learning algorithms, organizations can detect anomalies in real time and take immediate action to prevent fraud.
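As a rough illustration of "deviating significantly from normal behavior", the sketch below flags values whose z-score exceeds a threshold. This is a simple statistical baseline rather than a machine learning model; the `flag_anomalies` name, the threshold of 3, and the transaction amounts are assumptions made for the example.

```python
from statistics import mean, pstdev


def flag_anomalies(amounts, threshold=3.0):
    """Return the indices of values whose z-score exceeds the threshold."""
    mu = mean(amounts)
    sigma = pstdev(amounts)
    if sigma == 0:
        return []  # all values identical, so nothing stands out
    return [i for i, x in enumerate(amounts) if abs(x - mu) / sigma > threshold]


# Hypothetical transaction amounts; the last one is deliberately extreme.
transactions = [20.0, 22.5, 19.8, 21.0, 23.1, 20.7,
                18.9, 22.0, 21.4, 20.2, 19.5, 950.0]
anomalies = flag_anomalies(transactions)
```

Because a single extreme value inflates both the mean and the standard deviation, this rule becomes less sensitive on small samples; median-based variants or learned models are common alternatives.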

3.2 Real-time monitoring of transactions and activities

Real-time monitoring is essential for fraud detection and prevention. By analyzing transactional data in real time, organizations can quickly identify suspicious activities and take immediate action to prevent potential losses. AI technologies can enable organizations to monitor transactions and activities in real time, helping them detect and prevent fraud more effectively.

3.3 Behavioral biometrics for user authentication

Behavioral biometrics involves analyzing patterns of human behavior as a means of authentication. By analyzing users’ typing patterns, mouse movements, and other behavioral traits, organizations can verify their authenticity and detect potential fraudulent activities. AI-powered behavioral biometrics can enhance the security of user authentication and help organizations prevent fraud.

3.4 Advanced AI algorithms for fraud prediction

AI algorithms can analyze large amounts of data to identify patterns and trends that may indicate potential fraudulent activities. By combining AI algorithms with historical data, organizations can develop advanced fraud prediction models. These models can help organizations identify high-risk individuals or entities and take preventive measures to mitigate fraud.

4. Cybersecurity and Risk Management

In today’s digital age, cybersecurity is a top concern for organizations across industries. AI technologies offer innovative solutions for detecting and preventing cyber threats, as well as managing risks associated with cybersecurity.

4.1 AI-based threat detection and prevention

AI technologies can be used to detect and prevent cyber threats in real time. By analyzing network traffic, system logs, and other data sources, AI algorithms can identify potential threats and take immediate action to prevent cyber attacks. AI-powered threat detection systems can continuously monitor network activities and identify patterns that may indicate malicious activities.

4.2 Automated risk assessment and vulnerability analysis

AI technologies can automate the process of risk assessment and vulnerability analysis. By analyzing system configurations, software vulnerabilities, and other factors, AI algorithms can assess the overall cybersecurity risk posture of an organization. This enables organizations to identify potential vulnerabilities and take proactive measures to mitigate risks.

4.3 Predictive modeling for proactive risk mitigation

Predictive modeling involves analyzing historical data and developing models to predict future outcomes. In the context of cybersecurity, predictive modeling can be used to identify potential risks and vulnerabilities. By leveraging AI-powered predictive modeling, organizations can take proactive measures to mitigate risks and prevent cyber attacks.

4.4 AI-powered incident response and recovery

In the event of a cybersecurity incident, organizations need to respond quickly and effectively to minimize damage and ensure business continuity. AI technologies can help organizations automate incident response processes, enabling them to detect, investigate, and respond to incidents in real time. AI-powered incident response systems can analyze large amounts of data and provide actionable insights for decision-making during incident handling and recovery.

5. Regulation and Compliance

Regulation and compliance are critical aspects of risk management in the financial industry. AI technologies offer innovative solutions for automating compliance monitoring, regulatory reporting, and risk assessment.

5.1 AI-driven compliance monitoring and reporting

AI technologies can automate compliance monitoring by analyzing regulatory requirements and identifying gaps in compliance. By leveraging AI algorithms, organizations can continuously monitor their operations and identify potential compliance risks. AI-powered compliance reporting systems can generate accurate and timely reports, ensuring compliance with regulatory requirements.

5.2 Automated compliance checks and audits

AI technologies can automate compliance checks and audits, reducing the reliance on manual processes. By analyzing data and comparing it against regulatory requirements, AI algorithms can identify non-compliance issues and provide recommendations for corrective actions. Automated compliance checks and audits can help organizations ensure adherence to regulatory standards and mitigate compliance risks.

5.3 Intelligent regulatory risk assessment

Risk assessment is a core component of compliance management. AI technologies can analyze large amounts of data and assess the regulatory risk associated with business operations. By leveraging AI-powered risk assessment models, organizations can identify potential compliance risks and develop effective risk mitigation strategies.

5.4 Natural language processing for regulatory document analysis

Regulatory documents, such as laws, regulations, and standards, contain vast amounts of information that organizations need to comply with. Natural language processing (NLP) can help organizations analyze these documents and extract relevant information. By leveraging NLP, organizations can automate the analysis of regulatory documents and ensure compliance with the latest regulatory requirements.

6. Credit Risk Assessment

Credit risk assessment is a critical component of risk management for financial institutions. AI technologies offer innovative solutions for credit scoring, credit risk monitoring, and portfolio risk management.

6.1 AI algorithms for credit scoring and decision-making

Credit scoring involves assessing the creditworthiness of individuals or entities based on their financial history and other factors. AI algorithms can analyze large amounts of data and develop advanced credit scoring models. By leveraging AI algorithms, organizations can make more accurate credit decisions and manage credit risk effectively.
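To make the idea of a learned credit scoring model concrete, here is a minimal sketch of logistic regression trained by batch gradient descent in plain Python. The two features, the eight applicants, the learning rate, and all function names are hypothetical; a real scorecard would use far more data, proper validation, and fairness checks.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.1, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            error = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += error * xi[j]
            grad_b += error
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def default_probability(w, b, applicant):
    """Estimated probability of default for one applicant."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, applicant)) + b)


# Hypothetical applicants: [debt-to-income ratio, share of payments missed]; 1 = defaulted.
X = [[0.10, 0.00], [0.20, 0.00], [0.15, 0.10], [0.30, 0.10],
     [0.60, 0.50], [0.70, 0.60], [0.80, 0.40], [0.90, 0.80]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train_logistic(X, y)
p_low = default_probability(w, b, [0.10, 0.05])   # low-risk profile
p_high = default_probability(w, b, [0.85, 0.70])  # high-risk profile
```

Logistic regression is used here because its output can be read directly as a default probability and its weights remain interpretable, which matters when credit decisions have to be explained.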

6.2 Real-time credit risk monitoring and prediction

Real-time credit risk monitoring is essential for financial institutions to manage their credit portfolios effectively. AI technologies can analyze transactional data, credit bureau information, and other data sources to monitor credit risk in real time. By leveraging AI-powered credit risk monitoring systems, organizations can identify potential delinquencies or defaults and take proactive measures to mitigate credit risk.

6.3 Machine learning for portfolio risk management

Managing the risk associated with a portfolio of loans or investments is a complex task. Machine learning algorithms can analyze historical data and develop models to predict portfolio risk. By leveraging machine learning, organizations can assess the risk associated with their portfolios and make informed decisions regarding risk exposure and diversification.

6.4 Improved credit risk modeling using AI

Credit risk modeling involves developing models that can assess the creditworthiness of individuals or entities. AI technologies can enhance credit risk modeling by analyzing large amounts of data and identifying complex patterns. By leveraging AI-powered credit risk models, organizations can make more accurate credit decisions and manage credit risk effectively.

7. Portfolio Optimization and Management

Portfolio optimization and management are essential for maximizing returns and minimizing risks for financial institutions. AI technologies offer advanced tools for portfolio optimization, rebalancing, and asset allocation.

7.1 AI-driven portfolio optimization strategies

Portfolio optimization involves selecting the optimal combination of assets to maximize returns and minimize risks. AI technologies can analyze historical data, market trends, and other factors to develop advanced portfolio optimization strategies. By leveraging AI-driven portfolio optimization strategies, organizations can enhance portfolio performance and achieve their investment objectives.
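The optimization target itself can be shown with a classical, non-AI example: the closed-form minimum-variance weights for a two-asset portfolio. The volatilities, the correlation, and the function names below are hypothetical inputs chosen for illustration; in an AI-driven workflow, learned forecasts would typically supply these inputs rather than replace the optimization step.

```python
def min_variance_weights(vol_a, vol_b, correlation):
    """Closed-form minimum-variance weights for a two-asset portfolio."""
    var_a, var_b = vol_a ** 2, vol_b ** 2
    cov = correlation * vol_a * vol_b
    weight_a = (var_b - cov) / (var_a + var_b - 2 * cov)
    return weight_a, 1.0 - weight_a


def portfolio_variance(weight_a, weight_b, vol_a, vol_b, correlation):
    """Variance of a two-asset portfolio with the given weights."""
    cov = correlation * vol_a * vol_b
    return ((weight_a * vol_a) ** 2 + (weight_b * vol_b) ** 2
            + 2 * weight_a * weight_b * cov)


# Hypothetical annualized volatilities and correlation.
VOL_STOCKS, VOL_BONDS, CORR = 0.20, 0.10, 0.30

w_stocks, w_bonds = min_variance_weights(VOL_STOCKS, VOL_BONDS, CORR)
variance = portfolio_variance(w_stocks, w_bonds, VOL_STOCKS, VOL_BONDS, CORR)
```

With these inputs the combined portfolio has lower variance than either asset held alone, which is the diversification effect the optimization exploits.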

7.2 Machine learning for portfolio rebalancing and diversification

Portfolio rebalancing and diversification are essential components of portfolio management. Machine learning algorithms can analyze historical data and develop models to identify the optimal allocation of assets. By leveraging machine learning, organizations can automate portfolio rebalancing and diversification processes, ensuring that the portfolio remains aligned with predefined risk tolerance levels.

7.3 Risk-based asset allocation using AI

Asset allocation involves allocating investments across different asset classes based on their risk and return characteristics. AI technologies can analyze historical data, market trends, and other factors to develop risk-based asset allocation strategies. By leveraging AI-powered asset allocation models, organizations can make more informed decisions regarding asset allocation and minimize portfolio risks.

7.4 Predictive analytics for assessing portfolio performance

Assessing the performance of a portfolio is essential for portfolio management. Predictive analytics can analyze historical data and develop models to predict portfolio performance. By leveraging predictive analytics, organizations can gain insights into the future performance of their portfolios and make informed decisions regarding investment strategies.

8. Stress Testing and Scenario Analysis

Stress testing and scenario analysis are important risk assessment techniques that help organizations evaluate the resilience of their portfolios and identify potential vulnerabilities. AI technologies offer innovative solutions for stress test simulations, scenario analysis, and real-time risk assessment.

8.1 AI models for stress test simulations

Stress test simulations involve analyzing the impact of adverse events or scenarios on the performance of a portfolio. AI models can analyze large amounts of data and simulate stress test scenarios to assess the resilience of a portfolio. By leveraging AI-powered stress test simulations, organizations can identify potential vulnerabilities and develop strategies to mitigate risks.
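The core arithmetic of a stress test can be sketched without any model at all: apply a set of shocks to each position and sum the resulting profit or loss. The positions, the scenario names, and the shock sizes below are hypothetical; in practice, scenarios come from regulators, historical episodes, or generative models.

```python
# Hypothetical portfolio: market value per asset class.
positions = {"equities": 600_000.0, "bonds": 300_000.0, "cash": 100_000.0}

# Hypothetical scenarios: fractional price change per asset class.
scenarios = {
    "equity_crash": {"equities": -0.30, "bonds": 0.02},
    "rate_shock": {"equities": -0.05, "bonds": -0.10},
}


def stressed_pnl(positions, shocks):
    """Profit or loss if each position moves by its scenario shock (0 if unlisted)."""
    return sum(value * shocks.get(asset, 0.0) for asset, value in positions.items())


results = {name: stressed_pnl(positions, shocks) for name, shocks in scenarios.items()}
worst_scenario = min(results, key=results.get)
```

Reporting the worst scenario alongside the full table of results makes it easier to see which exposure drives the loss.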

8.2 Scenario analysis using machine learning techniques

Scenario analysis involves analyzing the impact of different scenarios on the performance of a portfolio. Machine learning techniques can analyze historical data and identify patterns that may indicate how a portfolio will perform under different scenarios. By leveraging machine learning, organizations can conduct scenario analysis more accurately and identify potential risks associated with different scenarios.

8.3 Real-time risk assessment during extreme market conditions

During extreme market conditions, such as market crashes or economic downturns, real-time risk assessment is essential for portfolio management. AI technologies can analyze market data, news feeds, and other data sources to assess the risk associated with different assets in real time. By leveraging AI-powered risk assessment systems, organizations can make informed decisions regarding risk exposure and portfolio management during extreme market conditions.

8.4 Predictive analytics for identifying potential vulnerabilities

Predictive analytics can help organizations identify potential vulnerabilities in their portfolios. By analyzing historical data and developing predictive models, organizations can gain insights into the risks their portfolios carry and develop strategies to mitigate those risks proactively.

9. Market Risk Assessment

Market risk assessment involves evaluating the potential impact of market fluctuations on the performance of a portfolio. AI technologies offer advanced tools for market risk measurement, real-time monitoring of market trends, and predictive analytics for assessing market volatility.

9.1 AI-powered tools for market risk measurement

AI technologies can analyze market data, historical trends, and other factors to measure market risk. By leveraging AI-powered tools, organizations can assess the potential impact of market fluctuations on the performance of their portfolios. AI-powered market risk measurement tools can provide accurate and timely insights into market risks, enabling organizations to make informed decisions regarding risk exposure and portfolio management.
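One widely used market risk measure is value at risk (VaR). The sketch below computes historical VaR by sorting past returns and reading off the tail; it is a classical baseline, not an AI method. The sample returns are hypothetical, and the rule for picking the tail observation is one simple convention among several.

```python
def historical_var(returns, confidence=0.95):
    """Loss level that past returns exceeded in roughly (1 - confidence) of periods."""
    ordered = sorted(returns)  # worst returns first
    tail = max(1, round((1 - confidence) * len(ordered)))
    return -ordered[tail - 1]  # report the loss as a positive number


# Hypothetical daily portfolio returns (20 observations).
daily_returns = [0.012, -0.008, 0.004, -0.031, 0.009, 0.015, -0.042, 0.007,
                 -0.003, 0.011, 0.002, -0.017, 0.006, 0.010, -0.012, 0.003,
                 -0.006, 0.008, -0.001, 0.005]

var_95 = historical_var(daily_returns, confidence=0.95)
var_90 = historical_var(daily_returns, confidence=0.90)
```

With only 20 observations the 95% figure is simply the worst observed day, which shows why historical VaR needs long return histories to be meaningful.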

9.2 Real-time monitoring of market trends and indicators

Real-time monitoring of market trends is essential for managing market risk effectively. AI technologies can analyze real-time market data, news feeds, and other indicators to identify potential market trends and risks. By leveraging real-time market monitoring systems, organizations can detect market fluctuations and take proactive measures to mitigate market risk.

9.3 Predictive analytics for assessing market volatility

Predicting market volatility is crucial for managing market risk. Predictive analytics can analyze historical market data and develop models to assess market volatility. By leveraging predictive analytics, organizations can gain insights into the potential volatility of the market and make informed decisions regarding risk exposure and portfolio management.

9.4 Machine learning for quantitative risk modeling

Quantitative risk modeling involves developing models to assess the potential impact of market fluctuations on the performance of a portfolio. Machine learning algorithms can analyze historical market data and identify complex patterns that may indicate potential risks. By leveraging machine learning for quantitative risk modeling, organizations can make more accurate assessments of market risk and develop effective risk management strategies.

10. Regulatory Compliance and Reporting

Regulatory compliance and reporting are critical components of risk management in the financial industry. AI technologies offer innovative solutions for automating compliance monitoring, regulatory reporting, and risk-based compliance decision-making.

10.1 AI automation for regulatory reporting

Regulatory reporting involves submitting timely and accurate reports to regulatory authorities. AI technologies can automate the process of regulatory reporting by analyzing data, generating reports, and ensuring compliance with regulatory requirements. By leveraging AI automation, organizations can streamline the regulatory reporting process, reduce errors, and ensure compliance with regulatory standards.

10.2 Natural language processing for compliance monitoring

Natural language processing (NLP) can be used to automate compliance monitoring by analyzing regulatory documents and extracting relevant information. By leveraging NLP, organizations can analyze regulatory documents in real time and identify potential compliance risks. NLP-powered compliance monitoring systems can provide accurate and timely insights into regulatory compliance, enabling organizations to take proactive measures to mitigate compliance risks.

10.3 Seamless integration of AI technologies for compliance

AI technologies can be seamlessly integrated into existing compliance workflows and systems, enabling organizations to automate compliance processes. By integrating AI technologies, organizations can improve the efficiency of compliance management, reduce manual intervention, and ensure compliance with regulatory requirements. Seamless integration of AI technologies can help organizations keep pace with rapidly evolving regulatory landscapes and enhance their overall compliance capabilities.

10.4 Risk-based compliance decision-making using AI

Risk-based compliance decision-making involves assessing the potential compliance risks associated with different activities and making informed decisions regarding compliance priorities. AI technologies can analyze large amounts of data and develop risk-based compliance models. By leveraging AI-powered risk-based compliance models, organizations can prioritize compliance efforts and allocate resources effectively to mitigate compliance risks.

In conclusion, the latest AI innovations in financial risk assessment offer unprecedented opportunities for organizations to better understand and manage risks. By leveraging machine learning algorithms, automation, and advanced analytics, organizations can identify potential risks, prevent fraud, ensure regulatory compliance, and optimize portfolio performance. These AI-powered tools and techniques enable organizations to make more informed decisions, improve efficiency, and enhance risk management capabilities in an increasingly complex and volatile business environment. As AI continues to evolve, financial institutions have the opportunity to stay ahead of emerging risks and drive better outcomes for their stakeholders.

Comparative Analysis Of Machine Learning Algorithms

Comparative analysis of machine learning algorithms. Learn about their strengths, weaknesses, and suitability for various applications. Empower your decision-making with this informative article.

In the fast-paced world of data-driven decision making, the selection of an optimal machine learning algorithm is crucial. To this end, a comparative analysis of machine learning algorithms has been conducted to assess their performance and suitability across various applications. This article presents a concise summary of the findings, providing insights into the strengths and limitations of different algorithms, empowering practitioners to make informed choices in their pursuit of effective predictive models.

Introduction

Machine learning algorithms have become an essential tool in the field of data analysis and decision-making. These algorithms enable computers to learn and make predictions or decisions without being explicitly programmed. With the increasing complexity of datasets and the need for accurate predictions, it has become crucial to compare and evaluate different machine learning algorithms. This article aims to provide a comprehensive overview of various machine learning algorithms and their comparative analysis.

Background of Machine Learning Algorithms

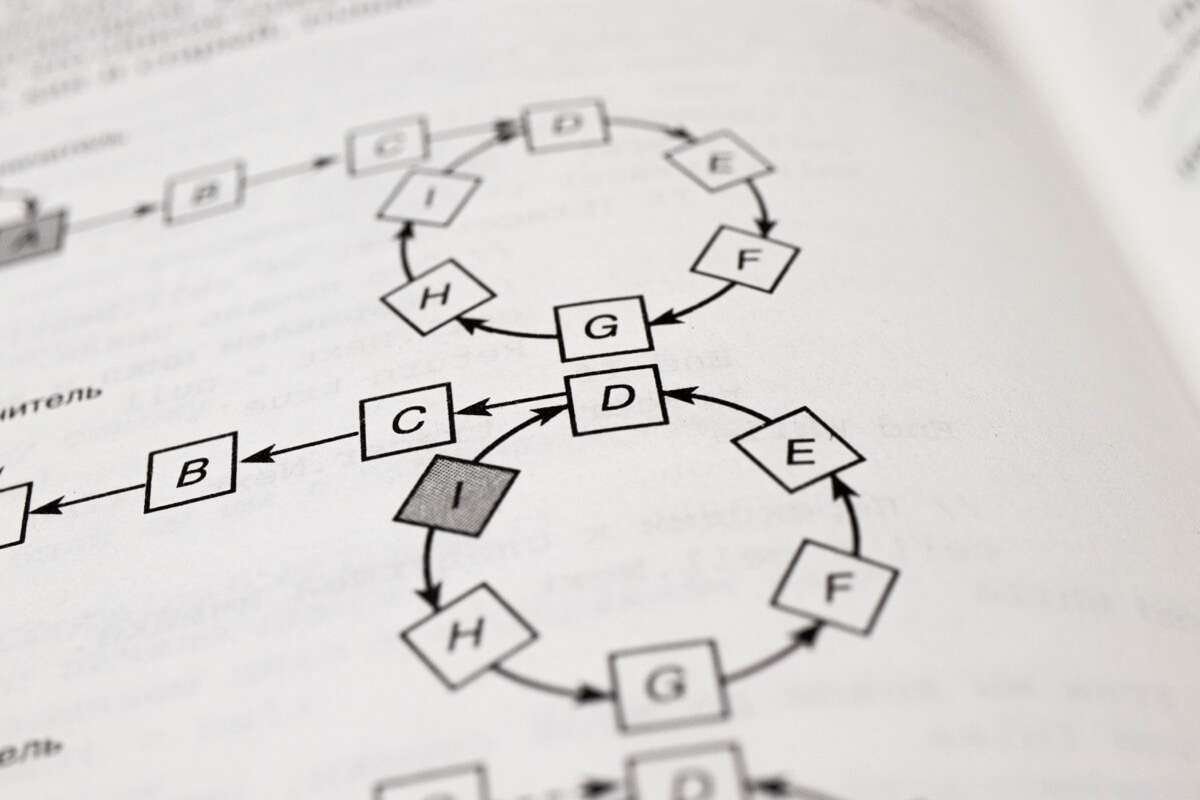

Machine learning algorithms are designed to enable computers to learn from and make predictions or decisions based on data. These algorithms can be broadly categorized into supervised, unsupervised, and reinforcement learning algorithms.

In supervised learning, models are trained on labeled data, where the desired output is known. The goal is to learn a mapping function from input features to output labels. Decision trees, random forests, support vector machines (SVM), naive Bayes, and k-nearest neighbors (KNN) are some of the commonly used supervised learning algorithms.

Unsupervised learning, on the other hand, deals with unlabeled data. The task is to discover the underlying structure or patterns in the data. K-means clustering, hierarchical clustering, principal component analysis (PCA), and Gaussian mixture models (GMM) are popular unsupervised learning algorithms.

Reinforcement learning involves an agent interacting with an environment and learning from the feedback or rewards received. The agent makes a sequence of decisions in order to maximize the cumulative rewards. Q-learning, deep Q-networks (DQN), and actor-critic methods are widely used reinforcement learning algorithms.

Importance of Comparative Analysis

Comparative analysis of machine learning algorithms plays a vital role in selecting the most suitable algorithms for a given task. It helps in understanding the strengths and weaknesses of different algorithms, enabling data scientists to make informed decisions.

By comparing the performance of various algorithms, one can identify the algorithm that best fits the problem at hand. It allows for a better understanding of the trade-offs between different algorithms, considering factors such as accuracy, computational complexity, interpretability, and robustness. Comparative analysis also helps in identifying the algorithm’s suitability for real-world applications.

In addition, comparative analysis aids in the identification of areas where improvement is needed for specific algorithms. It provides valuable insights into the limitations and advantages of each algorithm, facilitating future research in the field of machine learning.

Supervised Learning Algorithms

Decision Trees

Decision trees are a popular supervised learning algorithm that can be used for both classification and regression tasks. They create a flowchart-like structure in which each internal node tests a feature, each branch represents an outcome of that test, and each leaf node holds a predicted label (or, for regression, a predicted value). Decision trees are easy to interpret and can handle both categorical and numerical data.
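A minimal sketch of how a tree might choose a single split on one numerical feature. It assumes Gini impurity as the split criterion, which is one common choice (entropy is another); the function names and the toy data are hypothetical.

```python
def gini(labels):
    """Gini impurity: 0.0 for a pure group, larger for mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_threshold(values, labels):
    """Try each candidate threshold and keep the one with the lowest weighted impurity."""
    best_t, best_score = None, float("inf")
    for t in sorted(set(values))[:-1]:  # the largest value cannot split anything off
        left = [l for v, l in zip(values, labels) if v <= t]
        right = [l for v, l in zip(values, labels) if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_t, best_score = t, score
    return best_t, best_score


# Hypothetical feature values and class labels.
values = [1, 2, 3, 10, 11, 12]
labels = ["low", "low", "low", "high", "high", "high"]
threshold, impurity = best_threshold(values, labels)
```

A full tree repeats this search recursively on each resulting group, across all features, until a stopping rule such as maximum depth is reached.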

Random Forest

Random forest is an ensemble learning algorithm that combines multiple decision trees to make predictions. Each tree is trained on a bootstrap sample of the data and considers only a random subset of features when choosing each split. The final prediction aggregates the predictions of all the trees: a majority vote for classification or an average for regression. Compared with a single decision tree, a random forest typically improves accuracy and reduces overfitting.

Support Vector Machines (SVM)

Support Vector Machines are a powerful supervised learning algorithm used for classification and regression tasks. SVMs aim to find the hyperplane that separates the classes in the feature space with the largest margin. They can handle non-linear decision boundaries by using kernel functions. SVMs are effective for high-dimensional data, and the soft-margin formulation tolerates some misclassified or outlying points, with a regularization parameter controlling how strongly such points influence the boundary.

Naive Bayes

Naive Bayes is a probabilistic classifier that applies Bayes’ theorem under the assumption that features are conditionally independent given the class. This assumption rarely holds exactly, but it makes the algorithm simple and computationally efficient, and it performs well in text classification and spam filtering tasks.

K-Nearest Neighbors (KNN)

K-Nearest Neighbors is a non-parametric supervised learning algorithm used for classification and regression tasks. It classifies a new data point by taking the majority class among its k nearest neighbors in the feature space; for regression, it averages the neighbors’ values. KNN is simple to understand and implement, but prediction can be computationally expensive for large datasets because each query is compared against the stored training points.
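The voting step can be sketched in a few lines of plain Python. This version assumes Euclidean distance and a brute-force search over all training points; the `knn_predict` name and the toy data are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    by_distance = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical two-dimensional training data with two classes.
train_X = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
train_y = ["A", "A", "A", "B", "B", "B"]

prediction_near_a = knn_predict(train_X, train_y, (2, 2))
prediction_near_b = knn_predict(train_X, train_y, (7, 8))
```

Every prediction scans the whole training set, which is the cost mentioned above; spatial indexes such as k-d trees are a common way to reduce it.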

Unsupervised Learning Algorithms

K-Means Clustering

K-means clustering is a popular unsupervised learning algorithm used for clustering analysis. The algorithm aims to partition a dataset into k clusters by minimizing the sum of squared distances between data points and their nearest cluster centroid. K-means clustering is simple to implement and efficient for large datasets, although its result depends on the initial centroids and on the choice of k.
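A minimal sketch of the standard iterative procedure (often called Lloyd's algorithm): assign each point to its nearest centroid, then move each centroid to the mean of its points. The starting centroids are fixed by hand here so the run is reproducible; the function name and data are hypothetical.

```python
import math


def kmeans(points, centroids, iterations=10):
    """Alternate between assigning points and recomputing centroids."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
            if cluster else centroids[i]  # keep an empty cluster's centroid in place
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical points forming two visible groups, with hand-picked starting centroids.
points = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 9.5), (8.5, 9)]
centroids, clusters = kmeans(points, centroids=[(1, 1), (8, 8)])
```

Real implementations usually add a smarter initialization and stop early once assignments no longer change.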

Hierarchical Clustering

Hierarchical clustering is an unsupervised learning algorithm that builds a hierarchy of clusters. In its common agglomerative (bottom-up) form, it starts with each data point as a separate cluster and merges the closest clusters iteratively until all data points belong to a single cluster. Hierarchical clustering can produce a dendrogram that visualizes the clustering structure.

Principal Component Analysis (PCA)

Principal Component Analysis is a dimensionality reduction technique used in unsupervised learning. It transforms a high-dimensional dataset into a lower-dimensional space while retaining as much information as possible. PCA finds linear combinations of the original features called principal components, which capture the maximum variance in the data.

Gaussian Mixture Models (GMM)

Gaussian Mixture Models are probabilistic models used for density estimation and clustering analysis. GMM assumes that the data is generated from a mixture of Gaussian distributions. The parameters of these distributions are estimated from the data, typically with the expectation-maximization (EM) algorithm. GMM can handle complex distributions and has applications in image segmentation and anomaly detection.

Reinforcement Learning Algorithms

Q-Learning

Q-Learning is a model-free reinforcement learning algorithm used for making optimal decisions in Markov Decision Processes (MDPs). It learns an optimal action-value function, also known as a Q-function, through trial and error. Q-Learning is known for its simplicity, but in its tabular form it stores one value per state-action pair, which becomes impractical for very large state spaces.
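A minimal sketch of tabular Q-learning on a toy five-state chain, where the agent starts at the left end and earns a reward only on reaching the right end. The environment, the hyperparameters, and all names are assumptions made for illustration. Actions are chosen uniformly at random during training, which works because Q-learning is off-policy.

```python
import random

N_STATES, GOAL = 5, 4  # states 0..4; reaching state 4 ends the episode
LEFT, RIGHT = 0, 1


def step(state, action):
    """Deterministic chain: move left or right; reward 1 only on reaching the goal."""
    next_state = max(0, state - 1) if action == LEFT else state + 1
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            action = rng.randrange(2)  # random behaviour policy
            next_state, reward = step(state, action)
            # Q-learning update: bootstrap from the best action in the next state.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if state == GOAL:
                break
    return q


q_table = q_learning()
greedy_policy = [max((LEFT, RIGHT), key=lambda a: q_table[s][a]) for s in range(GOAL)]
```

After training, the greedy policy read from the table moves right in every non-terminal state, even though the agent never followed that policy while learning.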

Deep Q-Networks (DQN)

Deep Q-Networks combine Q-Learning with deep neural networks to solve complex reinforcement learning problems. The algorithm uses a deep neural network as a function approximator to approximate the Q-function. DQN has achieved significant breakthroughs in challenging tasks, such as playing Atari games.

Actor-Critic Methods

Actor-Critic methods are reinforcement learning algorithms that combine a policy (the actor) with a value function (the critic), often implemented as separate networks. The actor selects actions based on the current policy, while the critic evaluates those actions and provides feedback that is used to update the actor. By combining policy-based and value-based learning, Actor-Critic methods have proven effective in continuous control tasks.

Comparative Analysis Framework

Comparative analysis of machine learning algorithms requires a systematic framework to evaluate their performance. The following components are crucial for conducting a comprehensive comparative analysis:

Evaluation Metrics

Evaluation metrics quantify the performance of a machine learning algorithm. Accuracy, precision, recall, and F1-score are commonly used metrics for supervised learning. Cluster quality, silhouette coefficient, adjusted Rand index, and inertia are popular metrics for unsupervised learning. For reinforcement learning, average reward and convergence speed are relevant metrics, along with how well an agent balances exploration and exploitation.

Data Preprocessing

Data preprocessing involves preparing the dataset for analysis. It includes steps such as removing duplicates, handling missing values, scaling features, and encoding categorical variables. Consistent and appropriate data preprocessing is crucial for fair comparison between algorithms.

Model Selection

Model selection involves choosing the best machine learning algorithm for a specific task. It requires considering the algorithm’s performance, complexity, interpretability, and robustness. Cross-validation and grid search techniques can aid in model selection.
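The data-splitting step behind k-fold cross-validation can be sketched as follows. This version uses contiguous, unshuffled folds for clarity; the `k_fold_indices` name is hypothetical, and real workflows usually shuffle or stratify the data first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


folds = list(k_fold_indices(n_samples=10, k=3))
```

Each candidate algorithm is trained on every `train` split and scored on the matching `test` split; comparing the average scores gives a fairer picture than a single train/test split.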

Hyperparameter Tuning

Hyperparameters are the settings or configurations of an algorithm that are fixed before training rather than learned from the data, such as the number of neighbors in KNN or the depth of a decision tree. Hyperparameter tuning involves selecting the combination of hyperparameters that maximizes the algorithm’s performance on validation data. Techniques like grid search, random search, and Bayesian optimization can be used for hyperparameter tuning.
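Grid search itself is just an exhaustive loop over every combination of candidate values. In the sketch below, `toy_score` is a hypothetical stand-in for a cross-validated score, and the parameter names and grid values are assumptions made for the example.

```python
from itertools import product


def grid_search(score_fn, grid):
    """Evaluate every combination in `grid` and return the best one with its score."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_score(learning_rate, depth):
    """Hypothetical stand-in for a validation score; it peaks at (0.1, 3)."""
    return -((learning_rate - 0.1) ** 2) - (depth - 3) ** 2


grid = {"learning_rate": [0.01, 0.1, 1.0], "depth": [1, 3, 5]}
best_params, best_score = grid_search(toy_score, grid)
```

The number of evaluations grows multiplicatively with each added hyperparameter, which is why random search and Bayesian optimization are often preferred for larger search spaces.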

Supervised Learning Performance Comparison

Comparing the performance of supervised learning algorithms can provide insights into their suitability for different tasks. The following performance metrics are commonly used for comparison:

Accuracy

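Accuracy measures the proportion of correctly classified instances out of the total instances. It is a widely used metric for classification tasks. Higher accuracy generally indicates a better-performing algorithm, but it can be misleading on imbalanced datasets, where always predicting the majority class already scores well.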

Precision

Precision measures the proportion of true positive predictions out of all positive predictions. It represents the algorithm’s ability to avoid false positive predictions. A higher precision means that fewer of the instances flagged as positive are actually negative.

Recall

Recall measures the proportion of true positive predictions out of all actual positive instances. It represents the algorithm’s ability to avoid false negative predictions. A higher recall means that fewer actual positives are missed.

F1-Score

The F1-score is the harmonic mean of precision and recall. It provides a balanced measure of an algorithm’s performance, taking into account both false positives and false negatives. A higher F1-score indicates a better trade-off between precision and recall.
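The four metrics above all follow from the counts of true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN). The sketch below computes them for a small, invented set of predictions; the function name and the toy labels are assumptions for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)

    accuracy = (tp + tn) / len(pairs)
    # Guard against empty denominators (no positive predictions or no positives).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Imbalanced toy example: 2 positives among 10 instances.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]
metrics = classification_metrics(y_true, y_pred)
```

Here TP = 1, FP = 1, FN = 1, and TN = 7, so accuracy is 0.8 while precision, recall, and F1 are all 0.5. The gap shows why accuracy alone can overstate performance when one class dominates.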

Unsupervised Learning Performance Comparison

Comparing the performance of unsupervised learning algorithms can help identify their effectiveness in clustering and dimensionality reduction tasks. The following performance metrics are commonly used:

Cluster Quality

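Cluster quality measures how well a clustering algorithm groups similar instances together. When reference labels are available, it can be evaluated using external metrics such as the Rand index or Jaccard coefficient, which compare the produced clusters with the known grouping.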

Silhouette Coefficient

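The Silhouette coefficient compares, for each instance, how close it is to the other members of its own cluster (cohesion) with how close it is to the nearest other cluster (separation), and averages the result over all instances. It ranges from -1 to 1, with higher values indicating better clustering.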
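A minimal sketch of the computation, assuming Euclidean distance and at least two clusters: for each point, a is the mean distance to the other members of its own cluster, b is the smallest mean distance to the members of any other cluster, and the point's score is (b - a) / max(a, b). The function name and the toy points are hypothetical.

```python
import math

def silhouette_score(points, labels):
    """Mean silhouette over all points, using Euclidean distance."""
    clusters = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)

    scores = []
    for point, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            scores.append(0.0)  # common convention for singleton clusters
            continue
        # a: mean distance to the other members of the same cluster
        # (the point's distance to itself is 0, so it does not affect the sum).
        a = sum(math.dist(point, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster.
        b = min(
            sum(math.dist(point, q) for q in members) / len(members)
            for other, members in clusters.items()
            if other != label
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

points = [(0, 0), (0, 1), (10, 0), (10, 1)]
good = silhouette_score(points, [0, 0, 1, 1])  # groups nearby points together
bad = silhouette_score(points, [0, 1, 0, 1])   # pairs each point with a distant one
```

The labeling that groups nearby points scores close to 1, while the labeling that pairs distant points scores below 0.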

Adjusted Rand Index (ARI)

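The Adjusted Rand Index measures the similarity between the true cluster assignments and the ones produced by a clustering algorithm, so it requires ground-truth labels. It adjusts for chance agreement: a score of 1 indicates identical clusterings, values near 0 indicate agreement no better than random labeling, and negative values indicate agreement worse than chance. Higher values indicate better clustering.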
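The index can be computed from pair counts in the contingency table of the two labelings. The following is a sketch of the standard formula, (index - expected index) / (maximum index - expected index), with a hypothetical function name and toy labelings; it assumes at least two instances.

```python
from collections import Counter
from math import comb

def adjusted_rand_index(labels_true, labels_pred):
    """Adjusted Rand Index computed from pair counts."""
    n = len(labels_true)
    # Pairs of instances that share a cluster in both labelings.
    sum_cells = sum(comb(c, 2) for c in Counter(zip(labels_true, labels_pred)).values())
    # Pairs that share a cluster in each labeling taken separately.
    sum_true = sum(comb(c, 2) for c in Counter(labels_true).values())
    sum_pred = sum(comb(c, 2) for c in Counter(labels_pred).values())

    expected = sum_true * sum_pred / comb(n, 2)  # agreement expected by chance
    max_index = (sum_true + sum_pred) / 2
    if max_index == expected:
        return 1.0  # degenerate case, e.g. both labelings use a single cluster
    return (sum_cells - expected) / (max_index - expected)

truth = [0, 0, 1, 1]
same_up_to_renaming = adjusted_rand_index(truth, [1, 1, 0, 0])
discordant = adjusted_rand_index(truth, [0, 1, 0, 1])
```

The first labeling matches the truth exactly apart from the cluster names and scores 1, which shows that the index ignores label permutations. The second labeling splits every true cluster and scores below zero.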

Inertia

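Inertia measures the compactness of clusters generated by a clustering algorithm. It is the sum of squared distances from each instance to its nearest cluster centroid. Lower inertia indicates tighter clusters, but because inertia always decreases as the number of clusters grows, it is only meaningful when comparing solutions with the same number of clusters.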
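The definition translates directly into code. This sketch uses invented points and centroids to show how the value changes with the number of centroids; the function name is hypothetical.

```python
def inertia(points, centroids):
    """Sum of squared Euclidean distances from each point to its nearest centroid."""
    return sum(
        min(sum((a - b) ** 2 for a, b in zip(point, centroid)) for centroid in centroids)
        for point in points
    )

points = [(0, 0), (0, 1), (10, 0), (10, 1)]
one_cluster = inertia(points, [(5, 0.5)])
two_clusters = inertia(points, [(0, 0.5), (10, 0.5)])
four_clusters = inertia(points, points)  # one centroid placed on every point
```

The values are 101.0, 1.0, and 0.0 respectively. The drop to zero with one centroid per point illustrates why inertia on its own cannot be used to choose the number of clusters.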

Reinforcement Learning Performance Comparison

Comparing the performance of reinforcement learning algorithms can shed light on their ability to learn optimal policies. The following performance metrics are commonly used:

Average Reward

Average reward measures the average amount of reward received by an agent over a period of time. A higher average reward indicates better performance.

Convergence Speed

Convergence speed measures how quickly an algorithm learns an optimal policy. Faster convergence is desirable because it reduces both the training time and the number of environment interactions the agent needs.

Exploration vs. Exploitation Tradeoff

Exploration vs. Exploitation tradeoff refers to the balance between exploring new actions and exploiting the known actions that yield high rewards. An algorithm that strikes a good balance between exploration and exploitation is considered better.
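A simple way to see the tradeoff, and to measure average reward, is an epsilon-greedy agent on a multi-armed bandit. This is a sketch under assumptions: the arm payout probabilities, the epsilon value, and the function name are invented for the example, and epsilon-greedy is only one of several exploration strategies.

```python
import random

def run_bandit(arm_probs, epsilon, steps, seed=0):
    """Run epsilon-greedy on Bernoulli arms; return (average_reward, pull_counts)."""
    rng = random.Random(seed)
    n_arms = len(arm_probs)
    counts = [0] * n_arms
    estimates = [0.0] * n_arms
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: pick a random arm
        else:
            # exploit: pick the best current estimate (ties go to the lowest index)
            arm = max(range(n_arms), key=lambda a: estimates[a])
        reward = 1.0 if rng.random() < arm_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental update of the running mean reward for this arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total += reward
    return total / steps, counts

arm_probs = [0.2, 0.5, 0.8]  # hypothetical payout probabilities
greedy_avg, greedy_counts = run_bandit(arm_probs, epsilon=0.0, steps=2000)
mixed_avg, mixed_counts = run_bandit(arm_probs, epsilon=0.1, steps=2000)
```

With `epsilon=0.0` the agent never explores: all estimates start at zero, so it keeps pulling the first arm and never discovers the better ones. With `epsilon=0.1` it occasionally tries every arm, which lets its estimates improve. Comparing `greedy_avg` with `mixed_avg` is one way to quantify the effect of exploration on average reward.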

Real-World Applications Comparison

Comparative analysis of machine learning algorithms is crucial for identifying their suitability for real-world applications. Here are some application areas and the algorithms commonly used in them:

Image Recognition

Image recognition algorithms, such as convolutional neural networks (CNN), are widely used for tasks like object detection, image classification, and facial recognition.

Natural Language Processing

Natural Language Processing (NLP) algorithms, including recurrent neural networks (RNN) and transformer models, are used for tasks such as sentiment analysis, text classification, and machine translation.

Anomaly Detection

Anomaly detection algorithms, such as isolation forests and one-class SVM, are employed to detect unusual patterns or outliers in datasets. They find applications in fraud detection, network intrusion detection, and fault diagnosis.

Recommendation Systems

Recommendation systems utilize collaborative filtering, matrix factorization, and neural networks to provide personalized recommendations to users. These algorithms are employed in e-commerce, streaming platforms, and content recommendation.

Conclusion

In conclusion, comparative analysis of machine learning algorithms is a crucial step in selecting the most suitable algorithm for a given task. This article provided a comprehensive overview of various machine learning algorithms, including supervised, unsupervised, and reinforcement learning algorithms. We discussed their background, importance, and performance metrics. We also explored the comparative analysis framework, including evaluation metrics, data preprocessing, model selection, and hyperparameter tuning. Lastly, we highlighted real-world applications where these algorithms find utility. By conducting a comprehensive comparative analysis, data scientists can make informed decisions, optimize performance, and drive advancements in the field of machine learning.

Case Studies On AI In Finance For Fraud Prevention

Discover how artificial intelligence is being effectively used in the finance industry to detect and prevent fraud through compelling case studies. Explore the transformative potential of this cutting-edge technology.

In the ever-evolving landscape of finance, fraud prevention has become an increasingly critical concern. The convergence of artificial intelligence (AI) and finance has opened up new avenues for combating fraudulent activities, revolutionizing the way institutions protect themselves and their customers. Through a series of compelling case studies, this article explores how AI is being effectively utilized in the finance industry to detect and prevent fraud, shedding light on the transformative potential of this cutting-edge technology.

1. Introduction

Fraud prevention in the finance industry has become a paramount concern for financial institutions worldwide. With the increasing sophistication of fraudulent activities, traditional manual methods of detecting and preventing fraud have proven to be insufficient. As a result, financial institutions are turning to Artificial Intelligence (AI) technology to enhance their fraud prevention strategies. In this article, we will delve into the role of AI in finance and its significance in preventing fraud. We will also explore several case studies that demonstrate the successful implementation of AI for fraud prevention in different financial institutions, highlighting the outcomes, challenges faced, and lessons learned.

2. Understanding AI in Finance

2.1 Key Concepts of AI in Finance

To fully comprehend the significance of AI in fraud prevention, it is essential to understand the key concepts of AI in finance. Artificial Intelligence refers to the development of computer systems that can perform tasks that usually require human intelligence, such as speech recognition, decision-making, and problem-solving. In the finance industry, AI is applied to analyze vast amounts of data, detect patterns, and make predictions or recommendations. Machine Learning (ML) and Natural Language Processing (NLP) are critical components of AI that enable the automation of fraud prevention processes.

2.2 Benefits of AI in Finance

AI offers numerous benefits to the finance industry in terms of fraud prevention. Firstly, AI can process large volumes of data with greater accuracy and speed than manual methods. This allows financial institutions to identify potential fraud cases in real time, minimizing losses. Secondly, AI technology can continuously learn and adapt to new fraud patterns, ensuring proactive fraud detection and prevention. Moreover, AI can reduce false positives, enabling risk managers to focus on genuine threats. Lastly, AI-powered systems can provide valuable insights and data analytics that can aid in developing robust fraud prevention strategies.

2.3 Challenges in Implementing AI in Finance

While the benefits of AI in finance are significant, several challenges must be addressed for successful implementation. One major challenge is ensuring data privacy and security. Financial institutions must protect sensitive customer data while utilizing AI systems to detect and prevent fraud. Additionally, integrating AI technology into existing infrastructure can be complex and can require significant investments of both time and resources. Work such as data cleaning and integration, algorithm development, and staff training may be necessary. Lastly, gaining regulatory approval and addressing compliance issues is crucial to ensure the ethical and responsible use of AI in finance.

3. Significance of Fraud Prevention in Finance

3.1 The Need for Effective Fraud Prevention in Finance

Fraud poses a significant threat to financial institutions and their customers. Effective fraud prevention is necessary to safeguard the integrity of financial systems, protect customer assets, and maintain public trust. As the financial industry embraces digitalization, fraudsters have become increasingly sophisticated, exploiting vulnerabilities in transaction processes, payment systems, and customer accounts. Therefore, financial institutions must adopt advanced technologies like AI to stay ahead of fraudsters and prevent financial crimes.

3.2 Impact of Fraud on Financial Institutions

The impact of fraud on financial institutions can be devastating. Apart from monetary losses, fraud can lead to reputational damage, erosion of customer trust, and legal consequences. Financial institutions may face costly litigation, penalties, and regulatory scrutiny if they fail to adequately prevent and detect fraud. Additionally, fraudulent activities can disrupt business operations, compromise customer data, and undermine the overall stability of the financial system. Therefore, investing in robust fraud prevention measures, including AI, is crucial to mitigate these risks.

3.3 Role of AI in Enhancing Fraud Prevention in Finance

AI plays a pivotal role in enhancing fraud prevention measures in the finance industry. By leveraging AI technologies such as machine learning, predictive analytics, and anomaly detection, financial institutions can achieve improved accuracy and efficiency in fraud detection. AI-powered systems can identify subtle fraud patterns that may be difficult for human analysts to detect. Furthermore, AI can automate routine fraud prevention tasks, allowing fraud analysts to focus on complex cases and investigations. These advancements enable financial institutions to respond swiftly to emerging fraud threats while minimizing false positives and providing a better customer experience.

4. Case Study 1: XYZ Bank

4.1 Overview of the Case Study

XYZ Bank, a prominent international financial institution, faced significant challenges in detecting and preventing fraud within its operations. The bank’s existing manual processes were overwhelmed by the sheer volume of transactions, leading to delays in fraud detection and excessive false positives. As a result, XYZ Bank decided to implement AI technology to enhance its fraud prevention capabilities.

4.2 Implementation of AI for Fraud Prevention at XYZ Bank

XYZ Bank implemented an AI-powered fraud prevention system that integrated with its existing infrastructure. The system utilized machine learning algorithms to analyze vast amounts of transaction data, customer profiles, and other relevant parameters. By continuously learning from historical data, the system identified patterns and anomalies associated with fraudulent activities.

4.3 Results and Achievements

The implementation of AI for fraud prevention at XYZ Bank yielded remarkable results. The AI system significantly reduced the time taken to detect and prevent fraud, improving the bank’s response time. Moreover, false positives were minimized, allowing the bank’s fraud analysts to focus on genuine threats. The AI system also provided valuable insights and data analytics, enabling XYZ Bank to refine its fraud prevention strategies.

4.4 Lessons Learned

XYZ Bank learned several valuable lessons throughout the implementation of AI for fraud prevention. It became apparent that data quality and integration were critical for the success of an AI-powered system. Additionally, user acceptance and training played a crucial role in ensuring the adoption and effective utilization of the AI system among staff. Furthermore, regular fine-tuning of algorithms and continual monitoring of system performance were necessary to maintain optimal accuracy and efficiency.

5. Case Study 2: ABC Financial Services

5.1 Overview of the Case Study

ABC Financial Services, a leading provider of financial products, encountered significant challenges in combating fraud within its operations. The company was determined to leverage AI technology to enhance its fraud prevention measures and maintain its reputation as a trusted financial services provider.

5.2 Use of AI Technology for Fraud Prevention at ABC Financial Services

ABC Financial Services implemented an AI-based fraud prevention solution that utilized machine learning algorithms and natural language processing capabilities. The AI system analyzed customer data, transaction patterns, and external data sources in real time to detect fraudulent activities. By continuously learning and adapting to new fraud patterns, the AI system improved detection accuracy and minimized false positives.

5.3 Outcomes and Benefits