Technical Review Of NLP Advancements In 2023

This article gives an overview of the advancements in Natural Language Processing (NLP) that emerged in 2023. Language technology continues to evolve rapidly, affecting many industries and changing the way we interact with machines. From more sophisticated language models to advances in sentiment analysis techniques, this technical review covers the latest breakthroughs and their implications for businesses and individuals, including the potential of NLP to improve communication and make daily tasks more efficient.

Introduction

Natural Language Processing (NLP) is a subfield of artificial intelligence (AI) that focuses on the interaction between humans and computers using natural language. It involves the development and application of computational models to understand, analyze, and generate human language. In recent years, NLP has experienced significant advancements, driven by enhanced language models, improved text classification techniques, multi-modal NLP, and deep learning architectures. These advancements have led to a wide range of applications, including natural language understanding, machine translation, sentiment analysis, and question answering systems. However, NLP also faces challenges and limitations, such as data privacy concerns, bias and fairness issues, and the need for interpretable NLP models. Looking ahead, continued advancements in deep learning, ethical considerations, domain-specific NLP, and the development of human-like conversational agents are expected to shape the field.

Overview of NLP

Definition of NLP

Natural Language Processing (NLP) is a branch of AI that focuses on the interaction between computers and human language. It involves the development of algorithms and models that enable computers to understand, analyze, and generate human language in a way that is meaningful and contextually relevant.

History of NLP

The field of NLP originated in the 1950s with the development of machine translation systems and early language processing techniques. Over the years, NLP has evolved through various stages, including rule-based systems, statistical models, and more recently, deep learning approaches. The advancements in computational power and the availability of large-scale datasets have greatly contributed to the progress of NLP, allowing researchers to develop more sophisticated models with improved performance.

Advancements in NLP

Enhanced Language Models

One of the major advancements in NLP has been the development of enhanced language models, such as OpenAI’s GPT-3. These models are trained on massive amounts of text data and are capable of generating human-like responses and understanding complex language structures. Enhanced language models have revolutionized various applications, including text generation, chatbots, and dialogue systems.

Improved Text Classification Techniques

Text classification is a fundamental task in NLP, and advancements in this area have greatly improved the accuracy and efficiency of classifying text data. Techniques such as deep learning, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have shown remarkable performance in tasks such as sentiment analysis, spam detection, and document categorization.

Multi-modal NLP

Multi-modal NLP involves the integration of different modalities, such as text, images, and audio, to improve the understanding and generation of human language. This approach has gained significant attention in areas like image captioning, video summarization, and speech-to-text translation. By incorporating multiple modalities, NLP models can capture more nuanced information, leading to more accurate and contextually relevant results.

Deep Learning Architectures for NLP

Deep learning has played a crucial role in advancing NLP by enabling models to learn complex patterns and representations from raw text data. Architectures such as recurrent neural networks (RNNs), long short-term memory (LSTM), and transformers have demonstrated superior performance in tasks like machine translation, named entity recognition, and text summarization. Deep learning models have the ability to capture both local and global dependencies in text, allowing for more comprehensive language understanding.

Applications of NLP

Natural Language Understanding

Natural Language Understanding (NLU) refers to the ability of computers to comprehend and interpret human language. NLP techniques have been widely applied in areas such as voice assistants, virtual agents, and customer support chatbots. By understanding user queries and intents, NLU systems can provide more accurate and personalized responses, improving the overall user experience.

Machine Translation

Machine translation is the task of automatically translating text from one language to another. NLP advancements have greatly improved the performance of machine translation systems. Neural machine translation models, which utilize deep learning architectures, have shown significant progress in generating more accurate and fluent translations across various language pairs.

Sentiment Analysis

Sentiment analysis involves the identification and extraction of subjective information, such as opinions, emotions, and sentiments, from text data. This has numerous applications in areas such as market research, social media analysis, and customer feedback analysis. NLP techniques, particularly deep learning models, have greatly enhanced the accuracy and efficiency of sentiment analysis, enabling organizations to gain valuable insights from large volumes of textual data.

Question Answering Systems

Question answering systems aim to automatically provide relevant answers to user queries, often in the form of natural language responses. NLP advancements have powered the development of sophisticated question answering systems, such as IBM’s Watson, as well as pretrained language models, such as Google’s BERT, that can be fine-tuned for question answering. These systems utilize techniques like information retrieval, semantic representation, and deep learning to analyze and interpret user queries, extracting relevant information from large knowledge bases or text collections to generate accurate and contextual answers.

Challenges and Limitations

Data Privacy and Security Concerns

As NLP capabilities continue to grow, concerns regarding data privacy and security become increasingly important. NLP models often require access to large amounts of user data, which raises concerns about data protection and potential misuse of personal information. As such, ensuring robust data privacy measures and ethical considerations are vital for the responsible development and deployment of NLP systems.

Bias and Fairness Issues

Another challenge in NLP is the presence of bias in language data and models. NLP models are trained on large datasets, and if these datasets are biased, the models can learn and perpetuate biased behavior or discriminatory patterns. This can lead to unfair outcomes or reinforce existing biases in automated systems. Addressing bias and fairness issues in NLP models is crucial to ensure equitable and unbiased treatment of users and to foster inclusivity.

Interpretable NLP Models

Deep learning models used in NLP, such as transformers, are known for their impressive performance, but they often lack interpretability. Understanding why a model made a particular prediction or inference is essential for building trust and ensuring transparency in NLP systems. Developing interpretable NLP models is an ongoing challenge that researchers are actively working on, aiming to strike a balance between performance and interpretability.

The Future of NLP

Continued Advancements in Deep Learning

The future of NLP is expected to witness continued advancements in deep learning techniques. Researchers will strive to develop more advanced architectures, fine-tune models on larger datasets, and explore novel training techniques to further improve the performance of NLP systems. This will enable NLP models to understand and generate language more accurately, leading to enhanced user experiences and improved application outcomes.

Ethical Considerations

Ethical considerations will play a pivotal role in the future development and deployment of NLP systems. Addressing concerns related to data privacy, bias, and fairness will be crucial to ensure responsible and ethical use of NLP technologies. Frameworks and guidelines for ethical NLP practices will need to be developed and followed by researchers, developers, and organizations to promote transparency and safeguard user interests.

Domain-specific NLP

NLP advancements are likely to focus on domain-specific applications, where models are tailored to specific fields or industries. By understanding the nuances and specific language patterns within a domain, NLP models can provide more accurate and contextually relevant results. For example, domain-specific NLP models can assist in medical diagnoses, legal research, or financial analysis, offering specialized support and improving overall decision-making processes.

Human-like Conversational Agents

The development of human-like conversational agents, often referred to as chatbots or virtual assistants, will continue to be a major area of focus in NLP research. These agents aim to provide natural and seamless interactions with users, simulating human-like conversation. Advancements in conversational agents will involve improving language understanding, response generation, and context awareness, enabling more engaging and effective human-computer interactions.

Conclusion

The advancements in NLP have revolutionized the field of artificial intelligence by enabling computers to understand, analyze, and generate human language. Enhanced language models, improved text classification techniques, multi-modal NLP, and deep learning architectures have propelled NLP applications in various domains. However, challenges related to data privacy, bias, fairness, and interpretability need to be addressed for responsible and ethical development and deployment of NLP systems. Looking ahead, continued advancements in deep learning, ethical considerations, domain-specific NLP, and the development of human-like conversational agents are expected to shape the field, promising more accurate, contextually relevant, and user-centric language processing capabilities.

Technical Review Of Machine Learning Algorithm Advancements In 2023

This article gives an overview of the latest advancements in machine learning algorithms in 2023. As the technology evolves quickly, professionals in the field need to stay up to date with new developments. This technical review explores the techniques and methodologies currently applied in machine learning and highlights their potential impact on various industries. With a focus on accuracy, efficiency, and versatility, it aims to equip you with the knowledge needed to navigate the expanding landscape of machine learning algorithms.

1. Introduction

1.1 Overview of Machine Learning Algorithm Advancements

In recent years, machine learning has seen significant advancements, revolutionizing various industries and driving innovation across multiple domains. Machine learning algorithms have evolved rapidly, harnessing the power of data and computational resources to solve complex problems. These advancements have led to improved accuracy, faster processing speeds, and increased scalability of machine learning models. In this technical review, we will explore the latest advancements in machine learning algorithms in 2023, focusing on key areas such as reinforcement learning, deep learning, transfer learning, generative adversarial networks (GANs), explainable artificial intelligence (XAI), natural language processing (NLP), time series analysis, semi-supervised learning, and ensemble learning.

1.2 Importance of Technical Review in 2023

As machine learning continues to advance at a rapid pace, it is crucial for researchers, practitioners, and industry professionals to stay updated with the latest developments in the field. A comprehensive technical review provides invaluable insights into the state-of-the-art algorithms, architectures, and techniques, enabling individuals to make informed decisions regarding model selection, implementation, and optimization. Moreover, understanding the advancements in machine learning algorithms can help organizations leverage these technologies effectively and stay ahead of the competition. This review aims to provide a comprehensive analysis of the advancements in various machine learning techniques, aiding researchers and practitioners in their quest for building robust and high-performing models.

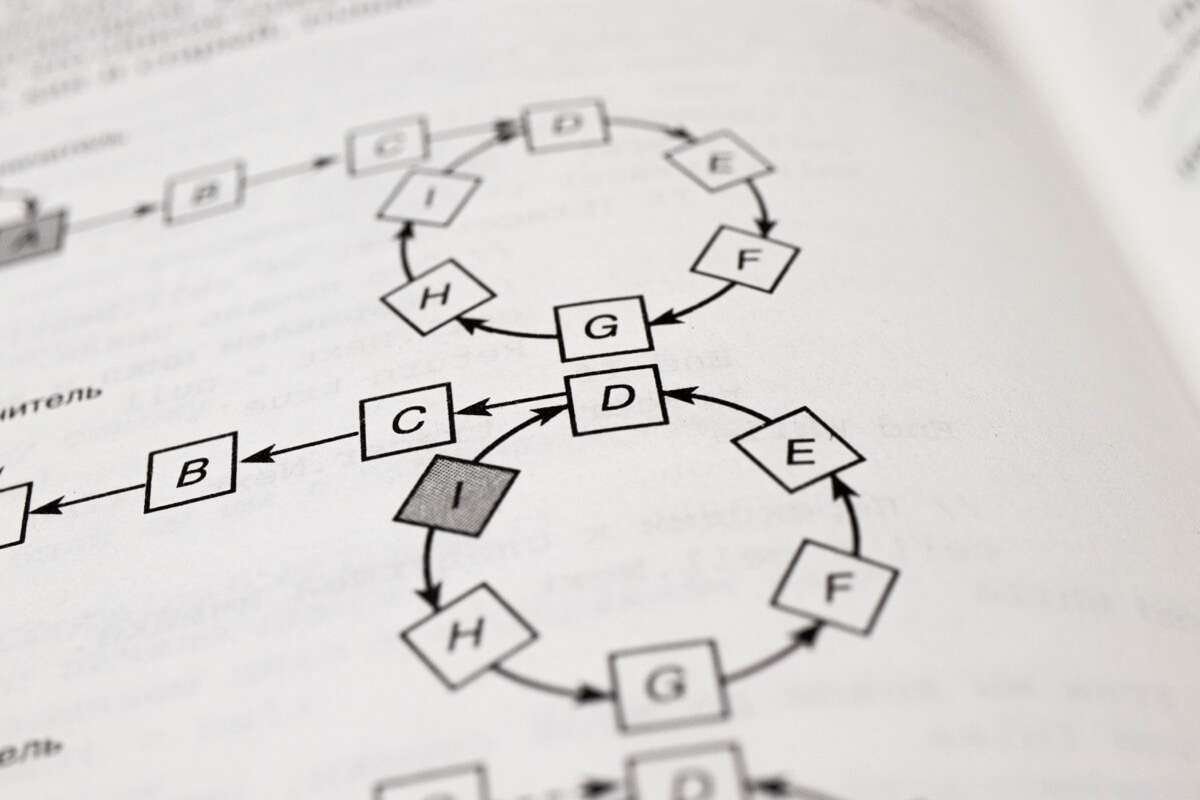

2. Reinforcement Learning

2.1 State of Reinforcement Learning Algorithms in 2023

Reinforcement learning, a subfield of machine learning, focuses on decision-making in dynamic and uncertain environments. In 2023, reinforcement learning algorithms have witnessed significant progress in terms of both performance and versatility. Deep reinforcement learning, combining reinforcement learning with deep neural networks, has been a particularly promising area. Models such as Deep Q-Networks (DQNs) and Proximal Policy Optimization (PPO) have achieved remarkable results in complex tasks such as game playing, robotics, and autonomous systems. Additionally, advancements in exploration and exploitation strategies, value function approximation, and model-based reinforcement learning have further enhanced the capabilities of reinforcement learning algorithms.
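The value-based idea behind DQNs is easier to see without a neural network. The following is a minimal sketch of the tabular Q-learning update that DQNs approximate; the state names, action names, and hyperparameter values are hypothetical and chosen only for illustration.

```python
def q_learning_update(q_table, state, action, reward, next_state,
                      alpha=0.1, gamma=0.99):
    """Apply one tabular Q-learning update and return the new Q-value.

    q_table maps each state to a dict of {action: estimated value}.
    alpha is the learning rate, gamma the discount factor.
    """
    # Value of the best action available in the next state.
    best_next = max(q_table[next_state].values())
    # Temporal-difference target: immediate reward plus discounted future value.
    td_target = reward + gamma * best_next
    # Move the current estimate a fraction alpha toward the target.
    q_table[state][action] += alpha * (td_target - q_table[state][action])
    return q_table[state][action]


# Hypothetical two-state example.
q_table = {
    "s0": {"left": 0.0, "right": 0.0},
    "s1": {"left": 1.0, "right": 2.0},
}
new_value = q_learning_update(q_table, "s0", "right", reward=1.0,
                              next_state="s1", alpha=0.5, gamma=0.9)
```

A DQN replaces the lookup table with a network that predicts the Q-values, and typically adds components such as a replay buffer and a target network, which this sketch omits.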

2.2 Advancements in Reinforcement Learning Techniques

Researchers have focused on improving the sample efficiency and stability of reinforcement learning algorithms in 2023. One line of work concerns off-policy learning, in which agents reuse experience collected under other policies, often with importance weighting to correct for the mismatch between policies. Distributional reinforcement learning, which models the full distribution of returns rather than only their expected value, has also improved the quality of value estimates and policy updates. Furthermore, there have been developments in meta-reinforcement learning, which enables agents to quickly adapt to new tasks by leveraging prior knowledge or experience. Techniques such as model-agnostic meta-learning (MAML) and Reptile, a simpler first-order meta-learning algorithm, have shown promising results in few-shot learning scenarios.

2.3 Comparative Analysis of Reinforcement Learning Algorithms

A thorough comparative analysis of reinforcement learning algorithms can guide practitioners in selecting the most suitable approach for a given problem. Key factors to consider include sample efficiency, convergence speed, stability, and generalization capabilities. When evaluating algorithms, it is essential to consider benchmarks and evaluation criteria, such as performance on standard OpenAI Gym environments, Atari games, or complex robotics tasks. Comparative analysis should also take into account the computational requirements, scalability, and interpretability of different algorithms. Overall, a comprehensive understanding of the strengths and weaknesses of various reinforcement learning techniques is necessary for informed decision-making and successful application of these algorithms.

3. Deep Learning

3.1 State of Deep Learning Algorithms in 2023

Deep learning, a subset of machine learning, focuses on training neural networks with multiple hidden layers for extracting complex patterns and representations from data. In 2023, deep learning algorithms have achieved remarkable performance across diverse domains such as computer vision, natural language processing, and speech recognition. State-of-the-art deep learning models, such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), and transformer models, have pushed the boundaries of accuracy and scalability. Advances in hardware, specifically graphics processing units (GPUs) and tensor processing units (TPUs), have accelerated the training and inference processes, enabling the deployment of deep learning models in real-world applications.

3.2 Advancements in Deep Learning Architectures

Deep learning architectures have evolved to address various challenges in model architecture, training, and optimization. One significant advancement is the development of attention mechanisms, such as self-attention and transformer models. These mechanisms have improved the performance of neural networks in tasks requiring long-range dependencies, such as machine translation and language understanding. Additionally, researchers have explored novel network architectures, including generative adversarial networks (GANs), variational autoencoders (VAEs), and capsule networks, leading to breakthroughs in image generation, unsupervised learning, and object recognition. Continual learning, which allows models to learn sequentially from non-stationary data, has also gained attention in the deep learning community.
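To make the self-attention mechanism concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is a simplification: it assumes the query, key, and value vectors are already given and leaves out the learned projection matrices and multiple heads that transformer models use. All function names are illustrative.

```python
import math


def softmax(scores):
    """Turn a list of scores into weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return one output vector per query.

    Each output is a weighted average of the value vectors, where the
    weights come from the similarity between the query and every key.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one dimension at a time.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs
```

Because every position attends to every other position directly, the distance between two tokens does not limit how strongly they can interact, which is why this mechanism helps with long-range dependencies.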

3.3 Performance Evaluation of Deep Learning Algorithms

Performance evaluation of deep learning algorithms involves assessing key metrics such as accuracy, precision, recall, and F1 score on specific benchmarks or datasets. For computer vision tasks, benchmarks like ImageNet, COCO, and Pascal VOC provide standardized datasets for evaluating object detection, image classification, and semantic segmentation models. Natural language processing benchmarks such as GLUE, SQuAD, and WMT allow for evaluation of tasks like sentiment analysis, question answering, and machine translation. It is crucial to consider the computational requirements and model interpretability while evaluating deep learning algorithms. The identification of potential biases, robustness to adversarial attacks, and scalability in handling large-scale datasets are essential aspects of performance evaluation.
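As a small illustration of the classification metrics named above, the sketch below computes precision, recall, and F1 score for a binary task from lists of true and predicted labels. The function name and the convention of returning 0.0 when a denominator is zero are assumptions made for this example.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    # Precision: share of predicted positives that are correct.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: share of actual positives that were found.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return precision, recall, f1


# Two true positives, one false positive, one false negative.
precision, recall, f1 = precision_recall_f1([1, 1, 0, 0, 1], [1, 0, 1, 0, 1])
```

Accuracy alone can be misleading on imbalanced datasets, which is one reason precision, recall, and F1 are usually reported alongside it.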

4. Transfer Learning

4.1 State of Transfer Learning Techniques in 2023

Transfer learning aims to leverage knowledge gained from one task or domain to improve learning and performance in another related task or domain. In 2023, transfer learning techniques have witnessed significant advancements, facilitating the transfer of knowledge across diverse domains such as computer vision, natural language processing, and audio processing. Pretrained models, such as those from the BERT (Bidirectional Encoder Representations from Transformers) family, have enabled fine-tuning on downstream tasks with minimal labeled data, improving efficiency and reducing the need for extensive training on specific tasks. Transfer learning has proved invaluable in scenarios with limited labeled data or when retraining deep learning models from scratch is infeasible.

4.2 Innovations in Transfer Learning Algorithms

Researchers have explored innovative approaches to further improve transfer learning algorithms in 2023. Adversarial learning, for instance, has been applied to mitigate the effects of dataset biases and improve the generalization capabilities of transfer learning models. Techniques such as domain adaptation and domain generalization aim to make models more robust to changes in input distribution, allowing them to perform well when deployed in different environments. Meta-learning approaches, including metric learning and model-agnostic meta-learning, have shown promise in adapting models quickly to new tasks with limited labeled data. These innovations in transfer learning algorithms have expanded the range of applications and improved the performance of models across domains.

4.3 Evaluation of Transfer Learning Models

To evaluate the effectiveness of transfer learning models, it is essential to consider various evaluation metrics depending on the specific task or domain. Evaluating transfer learning algorithms for computer vision tasks often involves using established benchmarks like ImageNet, COCO, or PASCAL VOC. These benchmarks allow for comparing the performance of models in object detection, image classification, and other computer vision tasks. For natural language processing tasks, datasets such as GLUE, SQuAD, or WMT can be used to assess the performance of transfer learning models in sentiment analysis, question answering, and machine translation, among others. It is crucial to evaluate both the improvement over baseline models and the computational requirements of transfer learning techniques to determine their suitability for real-world applications.

5. Generative Adversarial Networks (GANs)

5.1 Current Landscape of GANs in 2023

Generative Adversarial Networks (GANs) have gained significant attention in the field of machine learning due to their ability to generate realistic and high-quality data samples. In 2023, GANs have found applications in image synthesis, text generation, and data augmentation. They have had a major impact on computer vision by generating images that can be difficult to distinguish from real ones. GANs consist of two competing neural networks: a generator that produces artificial data samples, and a discriminator that distinguishes between real and generated samples. The generator and discriminator are trained iteratively, with the ultimate goal of the generator producing samples that are realistic enough to pass the discriminator’s scrutiny.
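The competition between the two networks is usually written as a minimax game. The formulation below is the standard objective from the original GAN paper, not something specific to 2023; the symbols are introduced here for illustration, with G the generator, D the discriminator, p_data the distribution of real samples, and p_z the noise distribution fed to the generator.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
```

The discriminator tries to maximize V by assigning high probability to real samples and low probability to generated ones, while the generator tries to minimize it by making D(G(z)) close to 1.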

5.2 Recent Enhancements in GANs

Researchers have made significant enhancements to GANs in recent years, addressing challenges such as mode collapse, training instability, and lack of diversity in generated samples. Techniques such as Wasserstein GAN (WGAN) and spectral normalization have improved the stability and convergence of GAN training. Conditional GANs (cGANs) generate samples conditioned on specific inputs, such as class labels, allowing for controlled synthesis of data samples. Progressive GANs, on the other hand, produce high-resolution images by growing the generator and discriminator progressively from low to high resolution. Additionally, techniques such as feature matching have been employed to mitigate mode collapse and improve the diversity of generated samples.

5.3 Comparative Study of GAN Variants

A comparative study of different GAN variants is essential for understanding the strengths and weaknesses of each approach and selecting the most suitable variant for a specific task. Evaluation of GANs involves assessing the quality of generated samples, diversity, and semantic consistency. Metrics such as Fréchet Inception Distance (FID), Inception Score (IS), and Structural Similarity Index (SSIM) provide quantitative measures of sample quality. In addition to assessing generated samples, examining the stability of training, convergence speed, and computational efficiency is crucial. Comparative studies can help identify the most effective GAN variants for various applications, including image synthesis, text generation, and data augmentation.

6. Explainable Artificial Intelligence (XAI)

6.1 Advancements in XAI Techniques

Explainable Artificial Intelligence (XAI) addresses the black-box nature of complex machine learning models, enabling humans to understand and interpret the decisions made by these models. In 2023, advancements in XAI techniques have focused on providing transparent and interpretable explanations for machine learning predictions. Techniques such as rule-based models, feature importance analysis, and local interpretability methods such as LIME and SHAP have allowed users to gain insights into the decision-making process of complex models. Additionally, attention mechanisms and saliency maps have provided visual explanations, enabling users to understand the parts of input data that contribute most to the model’s predictions.
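One common and simple form of feature importance analysis is permutation importance: shuffle one feature's values and measure how much the model's accuracy drops. The sketch below assumes a model exposed as a plain `predict(row)` function and rows stored as lists; the toy model and data are hypothetical. It is not how LIME or SHAP work internally.

```python
import random


def accuracy(predict, rows, labels):
    """Fraction of rows for which the model predicts the given label."""
    correct = sum(1 for row, label in zip(rows, labels)
                  if predict(row) == label)
    return correct / len(rows)


def permutation_importance(predict, rows, labels, feature, rng):
    """Accuracy drop after shuffling one feature column across rows."""
    baseline = accuracy(predict, rows, labels)
    column = [row[feature] for row in rows]
    rng.shuffle(column)  # break the link between this feature and the label
    permuted = [row[:feature] + [value] + row[feature + 1:]
                for row, value in zip(rows, column)]
    return baseline - accuracy(predict, permuted, labels)


def toy_model(row):
    """Hypothetical model that only looks at feature 0."""
    return int(row[0] > 0.5)


rows = [[0.1, 5.0], [0.9, 1.0], [0.7, 3.0], [0.2, 2.0]]
labels = [toy_model(row) for row in rows]
importance_of_unused = permutation_importance(
    toy_model, rows, labels, feature=1, rng=random.Random(0))
```

Since the toy model ignores feature 1, shuffling that column cannot change any prediction, so its importance is zero. In practice the shuffle is usually repeated several times and the drops averaged.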

6.2 State-of-the-art XAI Algorithms in 2023

State-of-the-art XAI approaches in 2023 combine multiple interpretability techniques to provide comprehensive explanations for complex machine learning models. Attribution methods such as Integrated Gradients and Layer-Wise Relevance Propagation (LRP) assign each input feature a share of the prediction, while Concept Activation Vectors (CAVs) relate a model’s internal representations to human-understandable concepts. Model-agnostic approaches, such as LIME and SHAP, provide explanations that can be applied to a wide range of machine learning models. Furthermore, advancements in automatic and post-hoc fairness analysis techniques have facilitated the identification and mitigation of bias in AI models, enhancing the transparency and accountability of machine learning systems.

6.3 Understanding the Interpretability of ML Models

Understanding the interpretability of machine learning models is critical for ensuring their trustworthiness and adoption in sensitive domains such as healthcare, finance, and autonomous systems. Evaluating the interpretability of ML models involves examining metrics such as fidelity, stability, and global versus local interpretability. Fidelity measures how well model explanations align with model behavior, while stability assesses the consistency of explanations across different perturbations of input data. Furthermore, different evaluation methodologies, such as human subject studies and quantitative assessments, can be used to validate the effectiveness of XAI techniques. Understanding the interpretability of ML models helps address concerns related to bias, fairness, and transparency, fostering responsible and ethical deployment of AI systems.

7. Natural Language Processing (NLP)

7.1 Current State of NLP Algorithms in 2023

Natural Language Processing (NLP) involves the interaction between humans and computers using natural language. In 2023, NLP algorithms have made significant advancements in understanding and generating human language. Models such as BERT, GPT-3, and Transformer-XL have demonstrated strong performance in tasks such as sentiment analysis, machine translation, and question answering. These models leverage techniques such as attention mechanisms and self-supervised pre-training to capture contextual information and improve language understanding and generation capabilities. With the availability of large-scale pretrained language models and extensive datasets, NLP systems have matched or exceeded human baselines on several benchmark tasks, although benchmark scores do not always translate into equivalent performance in real-world use.

7.2 Recent Developments in NLP Architectures

Recent developments in NLP architectures have focused on enhancing the generalization capabilities and efficiency of models. The Transformer, an architecture built on self-attention, has revolutionized NLP tasks by capturing long-range dependencies and improving the quality of language representations. Transfer learning approaches, such as fine-tuning pretrained models, have enabled the application of NLP models to downstream tasks with limited annotated data. Self-supervised pretraining objectives, including masked language modeling and next sentence prediction, have provided effective ways of pretraining language models without relying on human-annotated labels. Furthermore, advancements in neural machine translation and context-aware language generation have led to significant improvements in language understanding and generation tasks.

7.3 Analysis of NLP Techniques for Various Applications

NLP techniques have found numerous applications across different domains, from sentiment analysis and text classification to question answering and language translation. Evaluating the performance of NLP algorithms requires considering specific metrics tailored to each task. For sentiment analysis, accuracy, precision, recall, and F1 score are commonly used metrics. For machine translation, BLEU (Bilingual Evaluation Understudy) measures the n-gram overlap between a system translation and reference translations, while ROUGE (Recall-Oriented Understudy for Gisting Evaluation) is used mainly to evaluate summarization. Additionally, evaluating the efficiency and scalability of NLP models is crucial for real-world deployment. Understanding the strengths and limitations of NLP techniques enables practitioners to select the most suitable algorithms for specific applications and optimize their performance.
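To show what BLEU measures, the sketch below implements its core ingredient, the clipped (modified) n-gram precision, for a single candidate and a single reference. This is only one component: full BLEU combines several n-gram orders with a brevity penalty and supports multiple references, all of which are left out here. The function name is illustrative.

```python
from collections import Counter


def modified_ngram_precision(candidate, reference, n=1):
    """Clipped n-gram precision of a candidate against one reference.

    Both arguments are lists of tokens. Each candidate n-gram is counted
    at most as many times as it occurs in the reference.
    """
    def ngrams(tokens):
        return Counter(tuple(tokens[i:i + n])
                       for i in range(len(tokens) - n + 1))

    cand_counts = ngrams(candidate)
    ref_counts = ngrams(reference)
    total = sum(cand_counts.values())
    if total == 0:
        return 0.0
    # Clip each candidate count by the reference count for that n-gram.
    clipped = sum(min(count, ref_counts[gram])
                  for gram, count in cand_counts.items())
    return clipped / total


candidate = "the the the the the the the".split()
reference = "the cat is on the mat".split()
score = modified_ngram_precision(candidate, reference, n=1)
```

The clipping step is what stops a degenerate candidate from scoring well: "the" appears seven times in the candidate but only twice in the reference, so only two of the seven unigrams count as matches.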

8. Time Series Analysis

8.1 State of Time Series Analysis Methods in 2023

Time series analysis involves studying and modeling data points collected over successive time intervals. In 2023, time series analysis methods have witnessed significant advancements, enabling accurate forecasting and modeling of time-dependent patterns. Techniques such as recurrent neural networks (RNNs), long short-term memory (LSTM), and attention-based models have excelled in capturing temporal dependencies and making accurate predictions. Additionally, advancements in terms of automated feature extraction, anomaly detection, and change point detection have improved the capabilities of time series analysis methods. With the increasing availability of time series data in various domains, these advancements have facilitated better decision-making and planning based on predictive insights.

8.2 Advancements in Time Series Forecasting Algorithms

Advancements in time series forecasting algorithms have focused on improving the accuracy and efficiency of predictions. Hybrid models, combining multiple forecasting techniques such as ARIMA, exponential smoothing, and machine learning algorithms, have gained popularity due to their ability to capture various aspects of time series patterns. Deep learning models like LSTM and transformer-based architectures have shown superior performance in analyzing complex and long-term dependencies in time series data. Ensembling techniques, such as stacking and boosting, have also enhanced the accuracy and robustness of time series forecasts by combining the predictions of multiple models. These advancements have empowered industries such as finance, supply chain management, and energy to make informed decisions based on accurate predictions.
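Of the classical techniques mentioned above, exponential smoothing is the simplest to write down. The following is a minimal sketch of simple exponential smoothing, without trend or seasonality terms; initializing the level with the first observation is one common convention and is an assumption here.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the smoothed level after each observation.

    alpha in (0, 1] controls how quickly older observations are
    forgotten: higher values react faster to recent changes.
    """
    level = series[0]  # assumed initialization: first observation
    smoothed = [level]
    for value in series[1:]:
        # Blend the new observation with the previous level.
        level = alpha * value + (1 - alpha) * level
        smoothed.append(level)
    return smoothed


levels = simple_exponential_smoothing([10.0, 20.0, 30.0], alpha=0.5)
one_step_forecast = levels[-1]  # the last level is the next-step forecast
```

In a hybrid model, a forecast like this could be one of several inputs that are averaged or fed to a learned combiner.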

8.3 Evaluation of Time Series Models

Evaluating the performance of time series models requires considering appropriate metrics that capture the predictive accuracy and reliability of the models. Commonly used metrics include mean absolute error (MAE), mean squared error (MSE), root mean squared error (RMSE), and mean absolute percentage error (MAPE). These metrics provide a measure of the deviation between predicted and actual values. When evaluating time series models, it is essential to consider the forecasting horizon, as some models may perform better for shorter-term forecasts, while others excel in long-term predictions. Furthermore, the computational requirements and scalability of time series models are crucial factors to consider when selecting and evaluating algorithms for real-world applications.
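The four metrics above can be computed in a few lines. This sketch assumes two equal-length lists of actual and predicted values; note that MAPE is undefined when any actual value is zero, and the function does not guard against that case.

```python
import math


def forecast_errors(actual, predicted):
    """Compute MAE, MSE, RMSE, and MAPE (in percent) for a forecast."""
    errors = [a - p for a, p in zip(actual, predicted)]
    n = len(errors)
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    # MAPE divides by the actual values, so they must be non-zero.
    mape = 100.0 * sum(abs(e / a) for e, a in zip(errors, actual)) / n
    return {"mae": mae, "mse": mse, "rmse": rmse, "mape": mape}


metrics = forecast_errors([100.0, 200.0, 400.0], [110.0, 190.0, 380.0])
```

MSE and RMSE penalize large errors more heavily than MAE because the errors are squared, while MAPE expresses the error relative to the size of the actual values.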

9. Semi-supervised Learning

9.1 Overview of Semi-supervised Learning Approaches

Semi-supervised learning leverages both labeled and unlabeled data to improve model performance in scenarios where obtaining labeled data is expensive or time-consuming. In 2023, semi-supervised learning approaches have gained attention due to their ability to make use of vast amounts of unlabeled data available in many domains. Techniques such as self-training, co-training, and generative models have shown promise in utilizing unlabeled data to enhance the performance of supervised models. By leveraging the information embedded in unlabeled data, semi-supervised learning can achieve better generalization and mitigate overfitting.

9.2 Recent Enhancements in Semi-supervised Algorithms

Recent advancements in semi-supervised learning algorithms have focused on improving the robustness and scalability of these approaches. Consistency regularization encourages a model to produce the same prediction for an unlabeled sample and for a perturbed or augmented version of it, while pseudo-labeling treats the model's own high-confidence predictions on unlabeled samples as training labels. Both techniques reduce the reliance on labeled data and can improve generalization. Generative models, such as variational autoencoders (VAEs) and generative adversarial networks (GANs), have been employed to learn useful representations from unlabeled data, enabling better performance on downstream tasks. Adversarial learning techniques and domain adaptation approaches have also been utilized to enhance semi-supervised learning in scenarios with domain shift or limited labeled data.
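To make pseudo-labeling concrete, here is a minimal sketch of the loop: train on the labeled data, label only the unlabeled points the model is confident about, and retrain. The base model (a one-dimensional nearest-centroid classifier), the confidence measure (the gap between the two nearest centroid distances), the `min_margin` threshold, and the toy data are all assumptions chosen to keep the example self-contained.

```python
def centroids(points, labels):
    """Mean of the 1-D points for each class label."""
    sums, counts = {}, {}
    for x, y in zip(points, labels):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict_with_margin(x, cents):
    """Return (nearest class, margin), where margin is the gap between
    the distances to the two nearest centroids."""
    ranked = sorted((abs(x - c), y) for y, c in cents.items())
    margin = ranked[1][0] - ranked[0][0] if len(ranked) > 1 else float("inf")
    return ranked[0][1], margin

def pseudo_label(labeled_x, labeled_y, unlabeled_x, min_margin=2.0, rounds=3):
    """Repeatedly add confidently predicted unlabeled points to the training set."""
    xs, ys = list(labeled_x), list(labeled_y)
    pool = list(unlabeled_x)
    for _ in range(rounds):
        cents = centroids(xs, ys)
        remaining = []
        for x in pool:
            label, margin = predict_with_margin(x, cents)
            if margin >= min_margin:
                xs.append(x)          # accept the pseudo-label
                ys.append(label)
            else:
                remaining.append(x)   # too uncertain, keep unlabeled
        if len(remaining) == len(pool):
            break                     # nothing new was labeled
        pool = remaining
    return centroids(xs, ys), pool

# Hypothetical toy data: two labeled points and four unlabeled ones.
final_cents, leftover = pseudo_label([0.0, 10.0], ["a", "b"], [1.0, 2.0, 9.0, 5.0])
print(final_cents, leftover)
```

In this toy run the point at 5.0 sits between the two classes and is never pseudo-labeled, which illustrates why a confidence threshold matters: accepting uncertain predictions as labels can reinforce the model's own mistakes.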

9.3 Performance Comparison of Semi-supervised Techniques

Comparing the performance of different semi-supervised learning techniques entails assessing metrics such as accuracy, precision, recall, and F1 score on specific datasets or benchmarks. Additionally, it is crucial to evaluate the robustness of semi-supervised algorithms to variations in the amount of labeled data and the quality of the labels. It is essential to consider the computational requirements and scalability of algorithms, as large-scale semi-supervised learning can be challenging due to increased memory and processing demands. Comparative performance analysis allows for the identification of the most effective semi-supervised techniques for specific application domains, where labeled data is limited, expensive, or difficult to obtain.

10. Ensemble Learning

10.1 State of Ensemble Learning Methods in 2023

Ensemble learning aims to improve the predictive performance and robustness of machine learning models by combining the predictions of multiple base models. In 2023, ensemble learning methods have demonstrated their effectiveness in various domains, including classification, regression, and anomaly detection. Techniques such as bagging, boosting, and stacking have been widely adopted to create diverse and accurate ensemble models. The diversity among base models can be achieved through techniques like bootstrapping, feature randomization, and algorithmic variations. Ensemble learning focuses on capturing the wisdom of the crowd, where the collective decisions of multiple models lead to improved accuracy and generalization.
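Bagging, one of the techniques mentioned above, can be sketched in a few lines: each base model is trained on a bootstrap sample (drawn with replacement) and the ensemble predicts by majority vote. The base learner here is a one-dimensional threshold "stump", and the function names, the number of models, and the data are assumptions for illustration only.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Fit a 1-D threshold classifier that predicts 1 when x > threshold, else 0."""
    best_t, best_correct = xs[0], -1
    for t in sorted(set(xs)):
        correct = sum((x > t) == bool(y) for x, y in zip(xs, ys))
        if correct > best_correct:
            best_t, best_correct = t, correct
    return best_t

def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train one stump per bootstrap sample and return the list of thresholds."""
    rng = random.Random(seed)
    n = len(xs)
    thresholds = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        thresholds.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return thresholds

def bagging_predict(thresholds, x):
    """Majority vote over the base stumps."""
    votes = Counter(int(x > t) for t in thresholds)
    return votes.most_common(1)[0][0]

# Hypothetical training data: class 0 for small values, class 1 for large ones.
xs = [1, 2, 3, 4, 6, 7, 8, 9]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
thresholds = bagging_fit(xs, ys)
print(bagging_predict(thresholds, 0.5), bagging_predict(thresholds, 9.5))
```

Each bootstrap sample yields a slightly different threshold, which is the source of diversity among the base models; the vote averages out their individual quirks.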

10.2 Innovations in Ensemble Techniques

Researchers have made notable innovations in ensemble techniques in 2023, exploring novel ways to increase diversity and model performance. Diversity injection techniques, such as the random subspace method and random patches, aim to enhance the diversity among base models by training each one on a random subset of the features (random subspace) or of both the features and the instances (random patches). Hybrid ensemble models combining different ensemble techniques, such as bagging and boosting, have been proposed to exploit the strengths of each approach and mitigate their limitations. Additionally, ensemble pruning techniques, which keep only a well-performing subset of the trained base models, enable the creation of compact and accurate ensembles, reducing computational cost with little or no loss in performance.

10.3 Evaluation of Ensemble Models

The evaluation of ensemble models involves assessing multiple performance metrics, such as accuracy, precision, recall, and F1 score, on standard benchmark datasets or specific application domains. Comparative analysis against individual base models or other ensemble methods provides insights into the effectiveness and superiority of a given ensemble approach. Other evaluation criteria include model diversity, ensemble size, model fusion strategies, and computational efficiency. Ensemble models can mitigate overfitting, improve generalization, and enhance the robustness of predictions. Understanding the trade-offs between accuracy and computational complexity is essential for selecting and evaluating ensemble models in real-world scenarios.
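As a small illustration of the comparative analysis described above, the sketch below computes precision, recall, and F1 score for a single base model and for an ensemble on the same labels. The helper name `precision_recall_f1` and both prediction lists are hypothetical and were made up for the example; they are not results from any real model.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Binary precision, recall, and F1 for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

# Hypothetical labels and predictions for illustration only.
y_true = [1, 1, 1, 0, 0, 0]
base_pred = [1, 0, 0, 0, 0, 1]      # a single base model
ensemble_pred = [1, 1, 0, 0, 0, 0]  # the combined ensemble

base_scores = precision_recall_f1(y_true, base_pred)
ensemble_scores = precision_recall_f1(y_true, ensemble_pred)
print(base_scores, ensemble_scores)
```

Reporting these scores side by side with training and inference cost for each candidate makes the accuracy versus complexity trade-off explicit.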

In conclusion, the technical review of machine learning algorithm advancements in 2023 highlights the significant progress made in various subfields of machine learning. Reinforcement learning has witnessed advancements in techniques, algorithms, and comparative analysis, enabling the development of intelligent decision-making systems. Deep learning has revolutionized computer vision, natural language processing, and speech recognition, driven by innovative architectures and performance evaluation techniques. Transfer learning techniques have facilitated knowledge transfer across domains, enhancing model performance with limited labeled data. Generative adversarial networks (GANs) and explainable artificial intelligence (XAI) have transformed the landscape of data generation and model interpretability. Natural language processing (NLP) algorithms have achieved remarkable language understanding and generation capabilities. Time series analysis, semi-supervised learning, and ensemble learning have showcased advancements in forecasting, leveraging unlabeled data, and combining multiple models for improved accuracy and robustness. Understanding these advancements and their evaluation criteria empowers researchers, practitioners, and industry professionals to harness the full potential of machine learning algorithms in solving real-world problems.

Educational Resources For Understanding New Machine Learning Algorithms

Discover educational resources for understanding new machine learning algorithms. Find books, online courses, tutorials, research papers, websites, YouTube channels, online communities, and blogs to enhance your knowledge in this ever-expanding field. Gain a competitive edge in artificial intelligence.

In today’s rapidly evolving technological landscape, keeping abreast of new machine learning algorithms is crucial for professionals and enthusiasts alike. However, understanding these complex algorithms can be a daunting task without the right educational resources. Fortunately, there are numerous platforms, courses, and websites available that cater specifically to individuals seeking to enhance their knowledge of new machine learning algorithms. By utilizing these educational resources, you can navigate the intricate world of machine learning with confidence and gain a competitive edge in the ever-expanding field of artificial intelligence.

Books

Machine Learning: A Probabilistic Perspective

“Machine Learning: A Probabilistic Perspective” is a widely respected book that offers a comprehensive introduction to the field of machine learning. Written by Kevin Murphy, a renowned expert in the field, this book covers the fundamental concepts and techniques of machine learning, with a focus on probabilistic modeling. It provides a solid foundation for understanding the principles behind various machine learning algorithms and their applications.

Pattern Recognition and Machine Learning

“Pattern Recognition and Machine Learning” by Christopher Bishop is another highly recommended book for those looking to dive deeper into the world of machine learning. This book explores the relationship between pattern recognition, data analysis, and machine learning. It covers a wide range of topics, including Bayesian methods, neural networks, and support vector machines, and provides a comprehensive understanding of the underlying principles and algorithms of machine learning.

Deep Learning

For those interested in delving into the exciting realm of deep learning, “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville is a must-read. This book offers a comprehensive introduction to deep learning techniques and architectures, exploring topics such as convolutional neural networks, recurrent neural networks, and generative models. With its clear explanations and practical examples, this book serves as an invaluable resource for both beginners and experienced practitioners in the field.

Hands-On Machine Learning with Scikit-Learn and TensorFlow

“Hands-On Machine Learning with Scikit-Learn and TensorFlow” by Aurélien Géron is a practical guide that provides a hands-on approach to learning machine learning. It covers essential concepts and techniques using popular libraries like Scikit-Learn and TensorFlow. This book is filled with interactive examples and real-world projects, making it a great resource for those who prefer a more practical learning experience.

Online Courses

Coursera: Machine Learning by Andrew Ng

The Machine Learning course on Coursera, taught by Andrew Ng, is one of the most popular and highly recommended online courses for beginners. This course covers the fundamental concepts and techniques of machine learning, including linear regression, logistic regression, neural networks, and more. It provides a solid foundation for understanding and implementing various machine learning algorithms.

edX: Introduction to Artificial Intelligence and Machine Learning

The edX course “Introduction to Artificial Intelligence and Machine Learning” offers a comprehensive introduction to both AI and machine learning. This course covers various topics, including intelligent agents, search algorithms, reinforcement learning, and neural networks. It provides a broad overview of the field and allows learners to gain a solid understanding of the fundamental concepts and techniques.

Udemy: Machine Learning A-Z: Hands-On Python & R In Data Science

“Machine Learning A-Z: Hands-On Python & R In Data Science” on Udemy is a practical course that focuses on hands-on learning. This course covers a wide range of machine learning algorithms and techniques using both Python and R programming languages. It provides step-by-step guidance on implementing and applying machine learning algorithms to real-world problems.

DataCamp: Machine Learning with Python

DataCamp offers a comprehensive course on machine learning with Python. This course covers the key concepts and techniques of machine learning, including supervised and unsupervised learning, regression, classification, and clustering. It also provides hands-on coding exercises and projects to help learners gain practical experience.

Tutorials

Google AI: Machine Learning Crash Course

The machine learning crash course offered by Google AI is a concise and practical tutorial that provides an overview of machine learning concepts and techniques. It covers topics such as linear regression, logistic regression, neural networks, and more. This tutorial is designed to help learners quickly grasp the fundamentals of machine learning and apply them to real-world problems.

Kaggle: Machine Learning Tutorials

Kaggle offers a wide range of tutorials and resources for machine learning enthusiasts. These tutorials cover various topics, from beginner-level introductions to more advanced techniques. With Kaggle’s interactive platform, learners can practice their skills and participate in machine learning competitions to further enhance their understanding and knowledge.

Medium: Introductory Guides to Machine Learning Algorithms

Medium, a popular online publishing platform, hosts a plethora of introductory guides to machine learning algorithms. These guides provide in-depth explanations of various machine learning algorithms, their underlying principles, and their applications. They are written by experts in the field and serve as valuable resources for individuals looking to gain a deeper understanding of specific algorithms.

Towards Data Science: Machine Learning Explained

Towards Data Science, a leading online platform for data science and machine learning enthusiasts, features a wide range of articles and tutorials that explain machine learning concepts and techniques in a clear and accessible manner. These articles cover topics such as regression, classification, clustering, and deep learning, providing readers with comprehensive insights into the world of machine learning.

Research Papers

Deep Residual Learning for Image Recognition

The research paper “Deep Residual Learning for Image Recognition” by Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun introduces the concept of residual networks (ResNets), which revolutionized image recognition tasks. This paper explores the benefits of deep residual learning and presents a novel architecture that enables deeper and more accurate convolutional neural networks.

Generative Adversarial Networks

The research paper on “Generative Adversarial Networks” by Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio introduces the concept of generative adversarial networks (GANs). GANs have proven to be powerful tools for generating realistic synthetic data and have applications in various domains, including image generation and text synthesis.

Attention Is All You Need

The research paper “Attention Is All You Need” by Vaswani et al. presents the transformer model, an attention-based architecture that has revolutionized natural language processing. This paper demonstrates that the transformer model can achieve state-of-the-art results in machine translation tasks and shows the effectiveness of self-attention mechanisms in handling long-range dependencies.
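The core operation of the paper is scaled dot-product attention: each query is compared with every key, the scores are divided by the square root of the key dimension and passed through a softmax, and the resulting weights are used to average the values. The following is a minimal single-head sketch in plain Python, without masking, learned projections, or batching; the function names and the toy inputs are assumptions for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Hypothetical toy inputs: one query that matches both keys equally.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
out = attention([[0.0, 0.0]], keys, values)
print(out)
```

Because every query attends to every key in a single step, the distance between two positions in the sequence does not affect how directly they can interact, which is how self-attention handles long-range dependencies.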

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

The research paper on “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding” by Devlin et al. introduces BERT, a language representation model that has significantly advanced the field of natural language understanding. BERT utilizes a bidirectional transformer architecture and pre-training techniques to create contextualized representations of words, resulting in state-of-the-art performance on various language understanding tasks.

Websites

TowardsDataScience.com

TowardsDataScience.com is a comprehensive online platform that features articles, tutorials, and resources on various topics related to data science and machine learning. With contributions from industry experts and practitioners, the platform offers insights into the latest advancements, best practices, and applications of machine learning.

KDnuggets.com

KDnuggets.com is a popular website that provides a wealth of resources and news on machine learning, artificial intelligence, data science, and big data. It offers a collection of tutorials, articles, datasets, and job postings, making it a valuable hub for machine learning enthusiasts and professionals.

MachineLearningMastery.com

MachineLearningMastery.com, run by Jason Brownlee, is a renowned resource for learning and mastering machine learning. The website offers tutorials, books, and courses on various topics, providing practical guidance and hands-on examples for learners at different levels of expertise.

Distill.pub

Distill.pub is an innovative and visually appealing online platform that focuses on explaining complex machine learning concepts through interactive articles. It combines the expertise of researchers, designers, and developers to deliver intuitive and engaging explanations of cutting-edge machine learning algorithms and techniques.

YouTube Channels

Sentdex: Machine Learning with Python

The Sentdex YouTube channel offers a wide range of video tutorials and guides on machine learning with Python. The channel covers topics such as data preprocessing, regression, classification, neural networks, and much more. With its clear explanations and practical examples, Sentdex provides an accessible learning resource for individuals interested in machine learning with Python.

Two Minute Papers: Machine Learning and AI Research

The Two Minute Papers YouTube channel provides concise summaries of recent research papers in the fields of machine learning and artificial intelligence. Hosted by Károly Zsolnai-Fehér, the channel breaks down complex research papers into easily digestible two-minute videos. It serves as a valuable resource for staying up-to-date with the latest advancements in the field.

Machine Learning TV: Lectures on Various Machine Learning Topics

Machine Learning TV is a channel that hosts lectures and talks from leading experts in the field of machine learning. From introductory lectures to more advanced topics, the channel covers a wide range of machine learning techniques and algorithms. It offers viewers the opportunity to learn from world-class educators and researchers in the comfort of their own homes.

MIT Technology Review: Exploring AI

The MIT Technology Review YouTube channel explores various topics related to AI, including machine learning, robotics, and ethical considerations. It features interviews, discussions, and explanatory videos that provide insights into the latest developments and applications of AI. This channel offers a blend of informative content and thought-provoking discussions from a publication owned by the Massachusetts Institute of Technology.

Online Communities

Stack Overflow: Machine Learning Community

Stack Overflow, a popular question-and-answer platform for programmers, hosts a vibrant machine learning community. Here, individuals can seek answers to their questions, discuss challenges, and share insights related to machine learning. With a vast and active user base, this community provides a wealth of knowledge and support for learners and practitioners alike.

Reddit: r/MachineLearning

The subreddit r/MachineLearning is a bustling online community dedicated to all things machine learning. Users can engage in discussions, ask questions, and share interesting articles and resources related to the field. With its diverse user base and active moderation, this subreddit is an excellent platform for networking, learning, and staying up-to-date with the latest trends and developments in machine learning.

Cross Validated: Machine Learning Section

Cross Validated is a question-and-answer site in the Stack Exchange network. It focuses specifically on statistics, machine learning, and data analysis. Users can ask and answer questions, share insights, and participate in discussions related to machine learning. With its emphasis on statistical rigor, Cross Validated provides a valuable resource for individuals seeking in-depth understanding and discussion surrounding machine learning topics.

Kaggle: Machine Learning Discussion Forum

Kaggle’s machine learning discussion forum is a vibrant community where users can connect with fellow practitioners, share their machine learning projects, and discuss challenges and solutions. With a diverse user base consisting of data scientists, programmers, and enthusiasts, this forum provides a collaborative environment for learning, networking, and staying engaged in the machine learning community.

Blogs

Machine Learning Mastery by Jason Brownlee

Jason Brownlee’s blog, Machine Learning Mastery, provides a wealth of tutorials, articles, and resources on machine learning. With a focus on practical advice and hands-on implementation, this blog covers a wide range of topics, from the basics of machine learning to advanced techniques and algorithms. Jason Brownlee’s expertise and clear explanations make this blog an invaluable resource for individuals looking to advance their machine learning skills.

The Gradient

The Gradient is an online publication founded by students and researchers at the Stanford Artificial Intelligence Laboratory. It features high-quality articles written by researchers and industry experts, covering topics ranging from machine learning advancements to ethical considerations. With its insightful analysis and thought-provoking content, The Gradient offers a unique perspective on the intersection of AI and society.

Sebastian Ruder’s NLP/ML blog

Sebastian Ruder’s NLP/ML blog is a valuable resource for those interested in natural language processing (NLP) and machine learning. Sebastian Ruder, a research scientist focusing on NLP, shares his expertise through informative and accessible articles on topics such as word embeddings, transfer learning, and attention mechanisms. This blog offers insights into cutting-edge NLP research and practical implementations.

Google AI Blog

The Google AI Blog provides a platform for Google researchers and engineers to share insights into their work and advancements in the field of artificial intelligence. This blog covers a wide range of topics, including machine learning, computer vision, natural language processing, and more. With contributions from industry experts, the Google AI Blog offers a valuable resource for understanding the latest developments and applications of AI.

Conferences and Workshops

NeurIPS – Conference on Neural Information Processing Systems

NeurIPS, the Conference on Neural Information Processing Systems, is one of the most prestigious conferences in the field of machine learning and AI. It brings together leading researchers, practitioners, and industry experts to present and discuss the latest advancements in the field. NeurIPS features a wide range of workshops, tutorials, and paper presentations, providing a platform for knowledge exchange and networking.

ICML – International Conference on Machine Learning

The International Conference on Machine Learning (ICML) is a prominent conference that showcases the latest research and advancements in the field of machine learning. ICML features high-quality paper presentations, workshops, and tutorials, covering a wide range of topics and techniques. Attending ICML provides an opportunity to learn from leading experts and gain insights into the cutting-edge developments in machine learning.

CVPR – Conference on Computer Vision and Pattern Recognition

CVPR, the Conference on Computer Vision and Pattern Recognition, focuses on computer vision and its intersection with machine learning. This conference attracts researchers, practitioners, and industry experts from around the world to share their insights and advancements in computer vision technologies. CVPR features paper presentations, workshops, and tutorials, making it an ideal platform for staying up-to-date with the latest trends in the field.

ACL – Association for Computational Linguistics

The Association for Computational Linguistics (ACL) hosts an annual conference that brings together researchers and practitioners in the field of natural language processing and computational linguistics. ACL features paper presentations, tutorials, and workshops that cover a wide range of topics, including machine learning applications in language understanding, sentiment analysis, and machine translation. Attending ACL provides an opportunity to learn from leading experts and stay informed about the latest advancements in the field.

Social Media Groups

LinkedIn: Machine Learning and Artificial Intelligence Professionals

The LinkedIn group “Machine Learning and Artificial Intelligence Professionals” serves as a platform for professionals, researchers, and enthusiasts to connect, share knowledge, and engage in discussions related to machine learning and AI. With its large and diverse community, this group offers valuable networking opportunities and access to the latest news, job postings, and industry insights.

Facebook: Machine Learning and Deep Learning Community

The Facebook group “Machine Learning and Deep Learning Community” is a thriving community with a focus on machine learning and deep learning. This group provides a platform for members to discuss new research, share resources, ask questions, and connect with like-minded individuals. It serves as a valuable space for knowledge exchange and collaboration within the machine learning community.

Twitter: #MachineLearning

The hashtag #MachineLearning on Twitter serves as a gateway to a vast array of machine learning-related content, including research articles, tutorials, news updates, and discussions. By following this hashtag, users can stay up-to-date with the latest trends and developments in machine learning, connect with experts, and engage in conversations with fellow enthusiasts.

Data Science Central

Data Science Central is a popular online community for data scientists, machine learning practitioners, and data enthusiasts. It offers a platform for members to share their insights, ask questions, and access a wide range of resources related to machine learning and data science. With its active community and comprehensive content, Data Science Central is a valuable resource for individuals looking to enhance their knowledge and interact with industry professionals.

In conclusion, these educational resources offer a wealth of information and support for those seeking to understand new machine learning algorithms. Whether through books, online courses, tutorials, research papers, websites, YouTube channels, online communities, blogs, conferences, or social media groups, there is a wide range of options available to cater to different learning preferences and levels of expertise. By leveraging these resources, individuals can gain the knowledge and skills required to excel in the field of machine learning and stay informed about the latest advancements in the industry.

Case Studies On AI-powered Robotics In Healthcare

Discover the transformative potential of AI-powered robotics in healthcare. Explore case studies that highlight the impact on surgeries, patient care, diagnostics, rehabilitation, and drug discovery. Learn how AI robots enhance efficiency, accuracy, and patient outcomes. The future of healthcare is here.

In the rapidly advancing field of healthcare, the integration of artificial intelligence (AI) and robotics has emerged as a game-changer. This article presents a collection of case studies that highlight the transformative potential of AI-powered robotics in healthcare settings. From surgical precision to patient care and rehabilitation, these case studies underscore the significant impact of AI and robotics in enhancing efficiency, accuracy, and patient outcomes. By exploring real-world examples, this article aims to shed light on the immense possibilities and promising future of AI-powered robotics in the healthcare industry.

1. Robotic-Assisted Surgical Procedures

1.1 Benefits of AI-powered Robotics in Surgical Procedures

Robotic-assisted surgical procedures have revolutionized the field of healthcare, bringing numerous benefits to patients and healthcare professionals alike. One of the major advantages of AI-powered robotics in surgical procedures is the precision and accuracy they offer. By combining artificial intelligence and robotics, these systems can perform complex surgical tasks with utmost precision, minimizing the risk of human error.

Moreover, AI-powered robotics can enhance surgical outcomes by providing surgeons with real-time, high-definition imaging and 3D visualization of the surgical site. This allows for improved visualization of anatomical structures, leading to more accurate and efficient surgical procedures. Additionally, these systems can provide surgeons with haptic feedback, enabling them to feel the delicate tissues and structures they are operating on, further enhancing the precision of the procedure.

AI-powered robotics in surgical procedures can also lead to fewer postoperative complications and faster recovery times for patients. The minimally invasive nature of robotic-assisted surgery results in smaller incisions, leading to less pain, reduced risk of infection, and shorter hospital stays. This allows patients to recover more quickly and resume their daily activities sooner, resulting in improved quality of life.

1.2 Case Study: Da Vinci Surgical System

One of the most well-known AI-powered robotic systems in surgical procedures is the Da Vinci Surgical System. This system, developed by Intuitive Surgical, has been widely adopted in various surgical specialties including urology, gynecology, and general surgery. The Da Vinci Surgical System consists of robotic arms controlled by a surgeon who sits at a console and manipulates the instruments with precision and control.

The Da Vinci Surgical System offers numerous advantages over traditional surgical techniques. Its robotic arms are equipped with highly flexible and precise instruments, mimicking the movements of the surgeon’s hand. This allows for enhanced dexterity and maneuverability, making it particularly useful in performing complex procedures that require intricate movements. The system’s 3D visualization and magnification capabilities provide surgeons with a clear and detailed view of the surgical site, aiding in accurate and precise surgical interventions.

1.3 Case Study: Smart Tissue Autonomous Robot (STAR)

Another remarkable AI-powered robotic system in surgical procedures is the Smart Tissue Autonomous Robot (STAR). Developed by researchers at the Children’s National Health System, STAR is designed to autonomously suture soft tissues, making it a valuable tool in surgical procedures such as intestinal and vascular anastomosis.

STAR uses computer vision and machine learning algorithms to identify and track the tissues it needs to suture. The robot's arms delicately handle the tissues, placing small sutures with millimeter-level accuracy. The autonomous nature of STAR enables it to perform suturing tasks without direct human intervention, freeing up surgeons to focus on other critical aspects of the procedure. This not only reduces the workload for surgeons but also minimizes the risk of human error, resulting in improved surgical outcomes.

2. AI-powered Robots in Caregiving

2.1 Enhancing Patient Care and Assistance

AI-powered robots have made significant strides in the field of caregiving, providing valuable assistance and support to both patients and caregivers. These robots can perform a wide range of tasks to enhance patient care, including monitoring vital signs, assisting with daily activities, and providing companionship.

By leveraging artificial intelligence, these robots can analyze and interpret patient data in real-time, alerting healthcare professionals of any abnormalities or changes in the patient’s condition. This enables early detection of potential health issues, allowing for timely intervention and medical treatment. Additionally, AI-powered caregiving robots can assist patients with activities such as medication reminders, meal preparation, and mobility support, promoting independence and improving the overall quality of life for patients.

2.2 Case Study: PARO Robotic Seal

The PARO Robotic Seal is a prime example of an AI-powered robot in caregiving. Developed at Japan's National Institute of Advanced Industrial Science and Technology (AIST), PARO aims to provide therapeutic benefits to patients, particularly those suffering from dementia or other cognitive impairments. The robot resembles a baby seal and is designed to respond to touch and sound, providing interactive and emotional support to patients.

PARO utilizes AI algorithms to learn and adapt to individual patient preferences and behaviors, creating a personalized and engaging experience. By interacting with PARO, patients experience reduced stress and improved mood, which can have positive effects on their overall well-being. The robot’s presence also helps to alleviate feelings of loneliness and isolation, fostering a sense of companionship and emotional support.

2.3 Case Study: Mabu Personal Healthcare Companion

Mabu Personal Healthcare Companion, developed by Catalia Health, is another innovative AI-powered robot in the caregiving field. Mabu is designed to engage and educate patients, particularly those with chronic conditions, in their self-care journey. The robot utilizes natural language processing and machine learning algorithms to have meaningful conversations with patients, providing them with educational information, medication reminders, and emotional support.

Mabu’s ability to engage patients in interactive conversations helps to promote adherence to treatment plans and encourages patients to take an active role in managing their health. The robot can also collect data on patients’ symptoms and treatment responses, providing valuable insights to healthcare providers for personalized care and intervention. By empowering patients and providing continuous support, Mabu enhances the caregiving experience and contributes to improved patient outcomes.

3. Robotics in Diagnostics and Imaging

3.1 AI-powered Robots in Radiology

AI-powered robots have revolutionized the field of radiology, improving the accuracy and efficiency of diagnostic imaging procedures. These robots leverage artificial intelligence algorithms to analyze medical images and assist radiologists in detecting abnormalities, making diagnoses, and creating treatment plans.

By combining the expertise of radiologists with the computational power of AI, these robots can quickly and accurately identify and analyze patterns in medical images, including X-rays, CT scans, and MRIs. This not only reduces the workload for radiologists but also improves the accuracy of diagnoses, leading to more effective treatment strategies.

3.2 Case Study: Early Cancer Detection with AI

One notable case study in the application of AI in diagnostics is the early detection of cancer. Researchers have developed AI algorithms that can analyze medical images to identify early signs of cancer, improving the chances of successful treatment and survival. These algorithms can detect subtle changes in cellular structures and identify potential tumors or abnormalities that may be missed by human radiologists.

By using AI-powered robots to assist in the analysis of medical images, radiologists can significantly reduce the time required to review and interpret images, allowing for faster diagnosis and treatment initiation. Moreover, AI algorithms can continuously learn from vast amounts of medical data, improving their accuracy over time and contributing to the development of more sophisticated diagnostic tools.

3.3 Case Study: Robot-Assisted Ultrasound

Robot-assisted ultrasound is another area where AI-powered robots have made significant advancements. These robots can perform ultrasound examinations with precision and consistency, aiding in the diagnosis of various medical conditions.

By combining robotic technology with AI algorithms, these robots can autonomously manipulate the ultrasound probe, ensuring consistent imaging quality and reducing the risk of operator-dependent variability. This results in more accurate and reliable ultrasound images, facilitating the diagnosis of conditions such as cardiac abnormalities, liver diseases, and musculoskeletal disorders.

4. AI-powered Robotics in Rehabilitation

4.1 Enhancing Physical Therapy and Rehabilitation

AI-powered robotics have shown great potential in enhancing physical therapy and rehabilitation programs. These robots can assist patients in their recovery journey by providing targeted exercises, monitoring progress, and offering real-time feedback and guidance.

By utilizing AI algorithms, these robots can customize rehabilitation programs based on individual patient needs and capabilities. They can accurately track patient movements, detect deviations from the prescribed exercises, and provide corrective feedback to optimize rehabilitation outcomes. Additionally, AI-powered rehabilitation robots can adapt the difficulty level of exercises in real-time, ensuring that patients are appropriately challenged without risking injury.
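As a rough illustration of real-time difficulty adaptation, the sketch below raises or lowers an exercise level based on how often the patient completed recent repetitions correctly. The function name `adjust_difficulty`, the 0.85 and 0.60 thresholds, and the 1 to 10 level scale are all assumptions made for this example, not parameters of any specific rehabilitation robot.

```python
def adjust_difficulty(level, success_rate, min_level=1, max_level=10):
    """Raise the level when the patient succeeds often, lower it when they struggle.

    success_rate is the fraction (0.0 to 1.0) of recent repetitions
    performed correctly. Thresholds are illustrative assumptions.
    """
    if success_rate >= 0.85:
        level += 1  # patient is comfortable: make the exercise harder
    elif success_rate < 0.60:
        level -= 1  # patient is struggling: ease off to avoid injury
    # clamp so the level never leaves the allowed range
    return max(min_level, min(max_level, level))


level = 3
for rate in [0.90, 0.95, 0.50, 0.70]:
    level = adjust_difficulty(level, rate)
    print(f"success rate {rate:.2f} -> level {level}")
```

The middle band (between the two thresholds) deliberately leaves the level unchanged, so the patient is not bounced between levels by small fluctuations in performance.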

4.2 Case Study: Robot-Assisted Stroke Rehabilitation

Robot-assisted stroke rehabilitation is a prime example of the application of AI-powered robotics in the field of rehabilitation. These robots can assist stroke patients in regaining mobility and functionality by providing intensive and repetitive therapy sessions.

These robots utilize AI algorithms to analyze patient movements and adapt the rehabilitation program accordingly. They can provide real-time guidance, ensuring patients perform exercises with the correct technique and range of motion. By continuously monitoring patient progress, these robots can adjust the intensity and complexity of exercises, promoting gradual improvement and optimizing recovery outcomes.

4.3 Case Study: Lio Intelligent Walking Assist Robot

The Lio Intelligent Walking Assist Robot, developed by Panasonic, is an AI-powered robot designed to assist individuals with walking difficulties. This robot provides physical support and stability to individuals with limited mobility, enabling them to regain their independence and improve their quality of life.

The Lio robot utilizes AI algorithms to adapt to individual walking patterns and provide personalized assistance. By analyzing sensor data and monitoring body movements, the robot can provide the appropriate level of support and guidance, reducing the risk of falls and promoting safe walking. Additionally, the robot can continuously collect data on walking performance, allowing healthcare providers to monitor progress and tailor treatment plans accordingly.

5. AI Robotics for Drug Discovery and Development

5.1 Accelerating Drug Discovery Process

AI-powered robotics have significantly accelerated the drug discovery process, revolutionizing the field of pharmaceutical research and development. These robots can perform high-throughput screening of large libraries of compounds, enabling the identification of potential drug candidates with enhanced efficiency and speed.

By utilizing AI algorithms, these robots can predict the molecular properties of compounds and assess their potential for therapeutic activity. This enables researchers to focus on promising drug candidates, saving time and resources. Moreover, AI-powered robotics can analyze vast amounts of data from scientific literature and databases, facilitating the identification of novel targets and therapeutic approaches.
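The prioritization step can be sketched as follows, assuming a model has already assigned each compound a predicted activity score. The function `top_candidates`, the compound names, and the scores are hypothetical; a real pipeline would obtain the scores from a trained predictive model.

```python
import heapq


def top_candidates(predicted_scores, k):
    """Return the k compounds with the highest predicted activity score."""
    return heapq.nlargest(k, predicted_scores.items(), key=lambda item: item[1])


# Hypothetical model outputs: compound name -> predicted activity score.
scores = {
    "compound_A": 0.12,
    "compound_B": 0.91,
    "compound_C": 0.47,
    "compound_D": 0.78,
}
print(top_candidates(scores, 2))  # [('compound_B', 0.91), ('compound_D', 0.78)]
```

Using `heapq.nlargest` avoids sorting the entire library when only a small shortlist is needed, which matters when the library holds millions of compounds.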

5.2 Case Study: Atomwise – AI for Drug Discovery

Atomwise is a leading company applying AI to drug discovery and development. Its platform uses deep learning algorithms to predict the binding affinity of small molecules to target proteins, which helps identify potential drug candidates more accurately and efficiently.

By accelerating the drug discovery process, Atomwise’s AI platform has the potential to significantly reduce the time and cost required for developing new treatments. The platform screens millions of compounds in a fraction of the time that traditional methods require, offering researchers valuable insights into potential drug candidates.

5.3 Case Study: OpenAI and Drug Discovery

OpenAI, a research organization focusing on artificial intelligence, has also made significant contributions to drug discovery. They have developed AI systems capable of generating novel drug-like molecules with desired properties, paving the way for the development of new therapeutic interventions.

By utilizing deep learning algorithms, these systems can generate virtual libraries of drug-like molecules and predict their potential interactions with target proteins. This approach has the potential to greatly expand the scope of drug discovery by exploring novel chemical space and providing innovative solutions to complex diseases.

6. AI Robots in Mental Health Support

6.1 Assisting in Mental Health Treatment

AI robots have emerged as valuable tools in the field of mental health, providing assistance, support, and therapeutic interventions to individuals with mental health conditions. These robots can engage in interactive conversations, provide emotional support, and deliver evidence-based interventions, complementing traditional mental health treatments.

By leveraging AI algorithms, these robots can quickly analyze and interpret patients’ emotional expressions and responses, adapting their interactions accordingly. They can provide psychoeducation, deliver cognitive-behavioral interventions, and offer coping strategies to individuals struggling with mental health issues. Furthermore, AI robots can continuously learn from patient interactions, allowing for personalized and tailored support.

6.2 Case Study: Woebot – AI-based Mental Health Assistant

Woebot is an AI-based mental health assistant designed to provide support and interventions for individuals experiencing symptoms of anxiety and depression. Created by a team led by clinical research psychologist Alison Darcy, formerly of Stanford University, Woebot engages in conversational therapy, delivering evidence-based techniques drawn from cognitive-behavioral therapy (CBT).

By interacting with Woebot, individuals can receive support and guidance at any time, even outside traditional therapy hours. The AI algorithms behind Woebot analyze individual responses and adapt the conversational style and interventions accordingly. This personalized approach helps individuals develop coping strategies, challenge negative thought patterns, and improve emotional well-being.

6.3 Case Study: ElliQ – Aging Companion Robot

ElliQ is an AI-powered companion robot designed to assist elderly individuals in maintaining social connections, promoting mental stimulation, and preventing loneliness. This robot engages in interactive conversations, offers suggestions for activities, and helps individuals stay connected with their loved ones.

By leveraging AI algorithms, ElliQ can learn individual preferences and adapt its interactions accordingly. The robot can recommend engaging activities, provide reminders for important events, and facilitate communication through voice and video calls. By providing companionship and support, ElliQ aims to improve the mental well-being and quality of life of the elderly population.

7. Robotics in Telemedicine and Remote Care

7.1 Enabling Remote Healthcare Services

Robotics has played a pivotal role in enabling remote healthcare services, particularly in areas with limited access to medical resources and specialists. These robots can bridge the gap between patients and healthcare professionals by facilitating telemedicine consultations, remote monitoring, and virtual care delivery.

By utilizing AI-powered robotics, healthcare professionals can conduct remote consultations, perform physical examinations, and provide medical advice in real time. These robots can be equipped with high-definition cameras, sensors, and diagnostic tools, allowing for accurate assessments and interventions. Additionally, AI algorithms can analyze patient data collected by the robots and provide automated triage and decision support, helping to ensure timely and appropriate medical care.
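Automated triage can be pictured as ordering waiting cases by urgency. The sketch below assumes each case already carries a numeric urgency score (higher means more urgent) produced upstream; the function `triage_order` and the patient identifiers are hypothetical and do not reflect any vendor's implementation.

```python
import heapq


def triage_order(cases):
    """Return patient IDs ordered from most to least urgent.

    Each case is a (patient_id, urgency) pair, where a higher urgency
    value means the patient should be seen sooner.
    """
    # heapq is a min-heap, so negate urgency; the index preserves
    # arrival order among patients with equal urgency.
    heap = [(-urgency, index, patient_id)
            for index, (patient_id, urgency) in enumerate(cases)]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


waiting = [("patient_1", 2), ("patient_2", 5), ("patient_3", 2)]
print(triage_order(waiting))  # ['patient_2', 'patient_1', 'patient_3']
```

In practice the urgency score itself is the hard part and would come from a validated clinical model, with a clinician reviewing the resulting order rather than the system acting alone.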

7.2 Case Study: InTouch Health – Telehealth Solutions

InTouch Health is a leading company specializing in telehealth solutions, utilizing robotics to deliver remote healthcare services. Their robots, such as the RP-Vita and the RP-Xpress, enable healthcare professionals to interact with patients remotely, ensuring timely access to specialized care.

The telehealth robots developed by InTouch Health can be remotely controlled by healthcare professionals, allowing for real-time assessments and interventions. The robots can navigate through healthcare facilities, perform physical examinations, and transmit vital data to healthcare professionals. By enabling remote consultations and interventions, InTouch Health’s robots contribute to improved healthcare access and outcomes, particularly in underserved areas.

7.3 Case Study: Ava Telepresence Robot

The Ava Telepresence Robot is another notable example of AI-powered robotics in telemedicine and remote care. This robot enables healthcare professionals to provide virtual consultations and interventions, bridging the distance between patients and specialists.

Equipped with a screen, high-definition camera, and speaker system, the Ava Telepresence Robot allows healthcare professionals to engage in interactive video consultations with patients. The robot can navigate through various environments, including hospitals and homes, providing real-time communication and medical advice. This technology enhances collaboration between healthcare professionals, facilitates access to specialized care, and improves patient outcomes.

8. AI-powered Robotics in Pharmacy Automation

8.1 Streamlining Medication Dispensing and Management

AI-powered robotics have revolutionized pharmacy operations, streamlining medication dispensing and management processes. These robots can accurately and efficiently handle medication inventory, dispense prescriptions, and ensure medication safety.

By utilizing AI algorithms, these robots can accurately identify and count medications, reducing the risk of medication errors. They can handle a wide range of medication packages, ensuring accurate dispensing according to patient-specific prescriptions. Moreover, these robots can integrate with electronic health record systems, facilitating medication reconciliation and enhancing patient safety.
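The verification step behind error reduction can be sketched as a comparison between what was prescribed and what the robot counted. The function `dispensing_discrepancies` and the medication labels are hypothetical, and the counts are assumed to come from the robot's own identification and counting stage.

```python
def dispensing_discrepancies(prescribed, counted):
    """Map each medication to (counted - prescribed) wherever the two differ.

    A negative value means too few units were dispensed, a positive
    value means too many (or an item that was never prescribed).
    """
    discrepancies = {}
    for medication in prescribed.keys() | counted.keys():
        difference = counted.get(medication, 0) - prescribed.get(medication, 0)
        if difference != 0:
            discrepancies[medication] = difference
    return discrepancies


prescribed = {"medication_a": 60, "medication_b": 30}
counted = {"medication_a": 60, "medication_b": 29}
print(dispensing_discrepancies(prescribed, counted))  # {'medication_b': -1}
```

An empty result means the dispensed package matches the prescription; any non-empty result would be held back for a pharmacist to review.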

8.2 Case Study: PillPack – Automated Medication Packaging

PillPack, an online pharmacy acquired by Amazon, utilizes AI-powered robotics to automate the process of medication packaging. Their robots can sort and package medications into individual sachets, ensuring accurate and timely delivery to patients.

By leveraging AI algorithms, PillPack’s robots can accurately identify and sort medications based on patient-specific prescriptions. The robots can handle complex medication regimens, including multiple medications and dosages. This automation improves medication adherence, reduces the risk of medication errors, and enhances the convenience of medication management for patients.

8.3 Case Study: TUG Autonomous Mobile Robot